Dataset Nodes

1. Overview

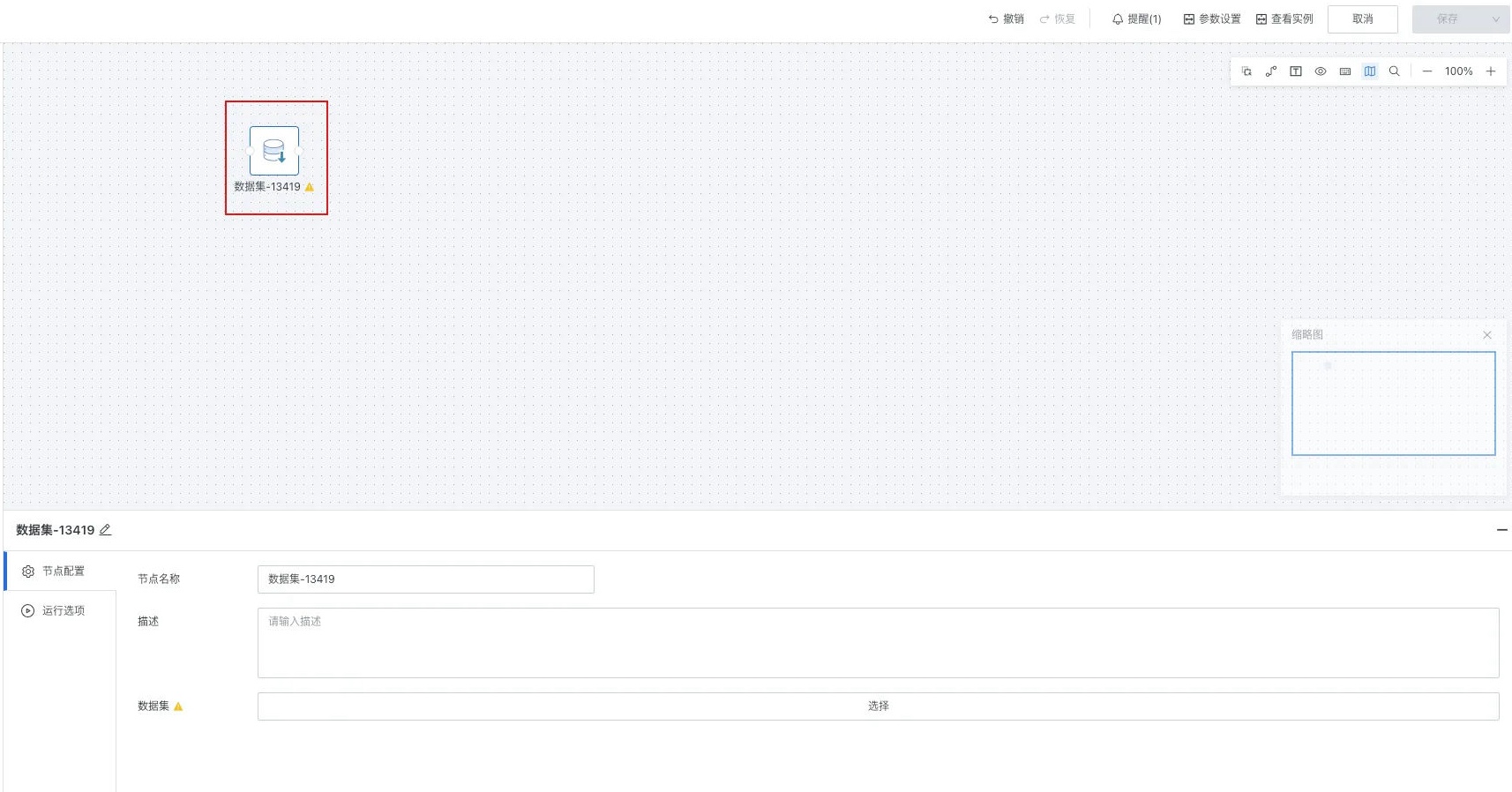

After entering the offline development task editing page, you can drag the "Dataset" node from the left side into the canvas and configure it.

This chapter details the configuration items of dataset nodes.

2. Node Configuration

After dragging the "Dataset" node onto the canvas, click the node to configure it. Node configuration includes the node name, description, and dataset. Clear basic information makes it easier to find the node and understand its purpose later when managing workflows.

- Dataset: Select from the dataset list.

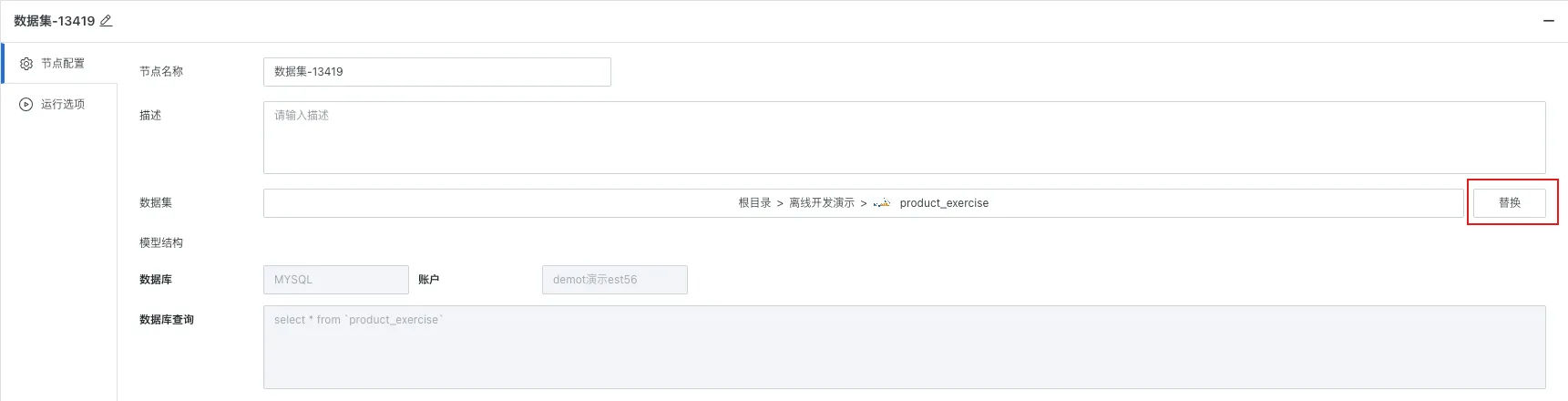

After a dataset is selected, you can view or replace it in the node.

- View dataset: Click the dataset name to jump to the dataset overview page.

- Replace dataset: Click the "Replace" button to the right of "Dataset" to select a different dataset as the scheduling object of the workflow.

Note

- Before a dataset can be selected or replaced, the workflow owner must have owner permissions on the target dataset, and the target dataset must not be referenced by any other workflow.

- After the dataset node is configured, you can orchestrate the task running order by connecting nodes. Success, failure, and sequential scheduling are supported.

- When the workflow runs, the "Dataset" node runs once its predecessor dependency conditions are met, or immediately if it has no dependency conditions. Running the node triggers an update of the referenced dataset, and the update logic is equivalent to the URL trigger update logic:

  - If incremental update is enabled, pre-data cleaning and data appending are performed according to the predecessor cleaning rules and the incremental update SQL.

  - If incremental update is not enabled, a full update is performed according to the model structure SQL.

- After the dataset is referenced in a workflow and the workflow is saved, the dataset's original update strategy automatically becomes invalid and is changed to "Follow workflow update".

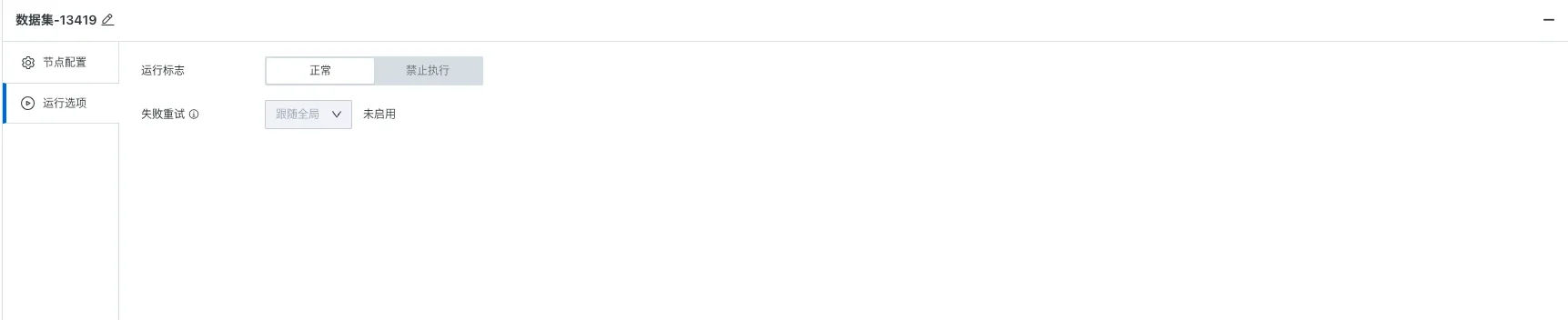
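The rules in the note above can be summarized in a small model. This is only an illustrative sketch, not the product's implementation: the `Dataset` class, its fields, and the functions `can_reference`, `reference`, and `update_steps` are all hypothetical names, and "owner permissions" is simplified to a single owner name.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Dataset:
    # Hypothetical fields; the product's real data model is not documented here.
    name: str
    owner: str
    incremental_update: bool = False
    referenced_by: Optional[str] = None  # workflow currently referencing the dataset
    update_strategy: str = "Original strategy"


def can_reference(dataset: Dataset, workflow_owner: str) -> bool:
    """Precondition for selecting or replacing a dataset in a node."""
    return dataset.owner == workflow_owner and dataset.referenced_by is None


def reference(dataset: Dataset, workflow: str, workflow_owner: str) -> None:
    """Reference the dataset from a workflow and save."""
    if not can_reference(dataset, workflow_owner):
        raise PermissionError(f"{dataset.name} cannot be referenced by {workflow}")
    dataset.referenced_by = workflow
    # The original update strategy becomes invalid once the workflow is saved.
    dataset.update_strategy = "Follow workflow update"


def update_steps(dataset: Dataset) -> List[str]:
    """Steps performed when the node runs and triggers a dataset update."""
    if dataset.incremental_update:
        return ["pre-data cleaning", "append data with incremental update SQL"]
    return ["full update with model structure SQL"]
```

For example, a dataset with incremental update enabled yields the two-step plan (cleaning, then appending), while any other dataset yields a single full update.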

3. Running Options

- Running flag

  - Prohibit execution: When the workflow reaches this node, the node is skipped without being executed. This is commonly used for temporarily troubleshooting data problems or for running only part of the tasks.

  - Normal: The node runs according to the existing scheduling strategy. This is the default running flag for nodes.

- Failure retry: Synchronized with the failure retry strategy configured by the dataset itself.
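As a rough illustration of how the running flag affects a node, the sketch below models the decision made when the workflow reaches it. The `RunFlag` enum and the `node_action` function are hypothetical names chosen for this example and do not correspond to a documented API.

```python
from enum import Enum


class RunFlag(Enum):
    NORMAL = "Normal"  # default running flag
    PROHIBIT_EXECUTION = "Prohibit execution"


def node_action(flag: RunFlag, dependencies_met: bool) -> str:
    """Return what happens when the workflow reaches the node."""
    if flag is RunFlag.PROHIBIT_EXECUTION:
        # The node is skipped directly, regardless of its dependencies.
        return "skip"
    # Normal: follow the existing scheduling strategy.
    return "run" if dependencies_met else "wait"
```

Calling `node_action(RunFlag.PROHIBIT_EXECUTION, True)` returns `"skip"`, while a node with the default flag runs only once its dependency conditions are met.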