ETL Advanced Settings

1. ETL Advanced Settings Overview

This document describes what each Smart ETL advanced setting does and how to configure it.

2. ETL Advanced Settings Description

Operation Path: Data Preparation - Smart ETL - ETL Details Page - Advanced Settings.

Function Description: Four runtime parameters can be set, as described below.

2.1 Parameter 1: ETL Intermediate Result Caching

When an ETL is relatively complex (it has at least two output datasets and its complexity exceeds the configured complexity threshold), enabling this setting automatically caches intermediate calculation results to speed up the overall ETL run. In special cases, this feature can be disabled for an individual ETL.

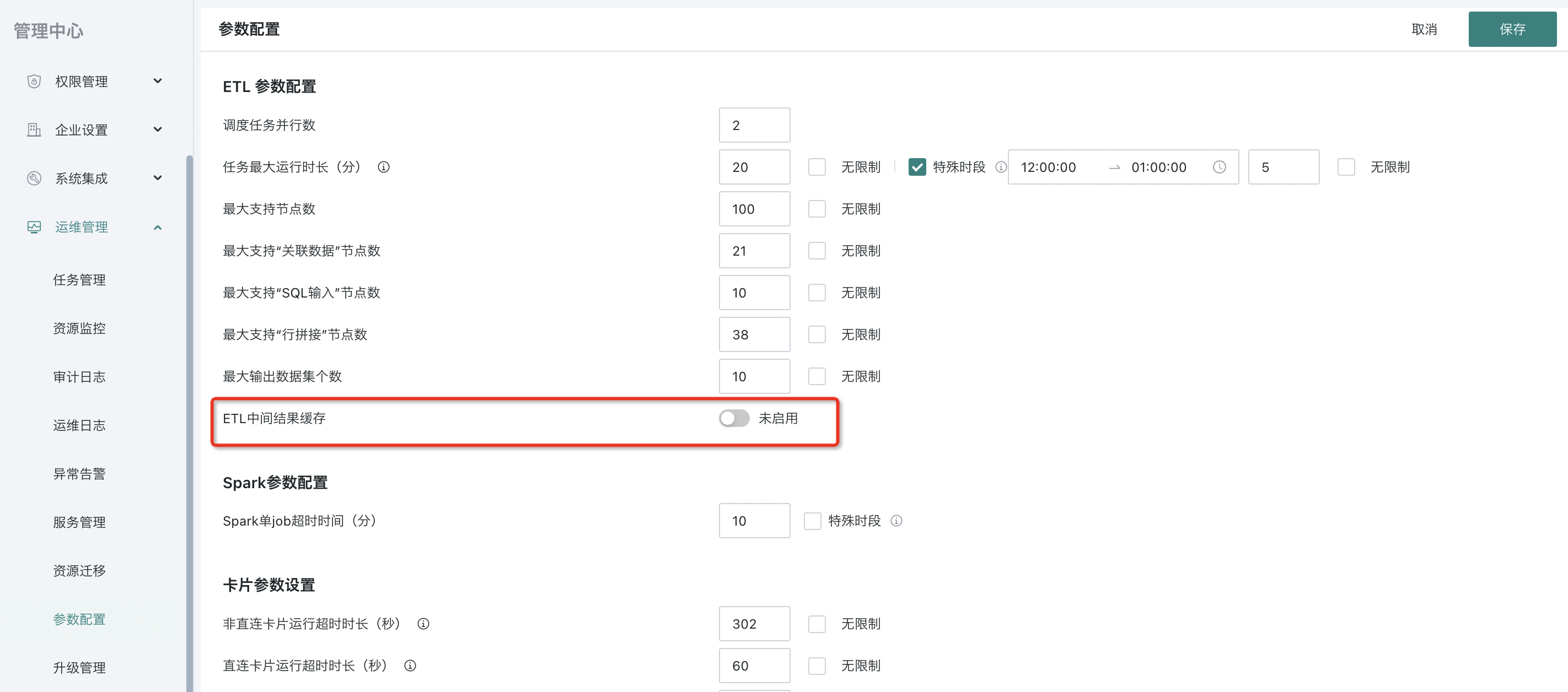

- Complexity threshold setting page path: Management Center - Operations Management - Parameter Configuration - ETL Parameter Configuration.

- The recommended default complexity threshold is 100. Setting it too low may cause more ETLs to cache intermediate results automatically when they run.
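The caching condition above combines two checks with a per-ETL switch. The sketch below models that decision in plain Python. The function name and the numeric `complexity` score are hypothetical: the document does not say how complexity is computed, only that it is compared with the threshold (100 by default). "Exceeds" is read here as strictly greater than.

```python
# Hypothetical model of the caching rule described above.
# The function name and the numeric "complexity" score are illustrative
# assumptions; the platform does not document how complexity is computed.

DEFAULT_COMPLEXITY_THRESHOLD = 100  # recommended default from Parameter Configuration


def should_cache_intermediate_results(
    num_output_datasets: int,
    complexity: float,
    threshold: float = DEFAULT_COMPLEXITY_THRESHOLD,
    caching_enabled: bool = True,
) -> bool:
    """Return True when the assumed caching rule would apply to an ETL."""
    if not caching_enabled:
        # Caching was switched off for this particular ETL.
        return False
    # Both conditions must hold: at least two outputs AND complexity above the threshold.
    return num_output_datasets >= 2 and complexity > threshold


if __name__ == "__main__":
    print(should_cache_intermediate_results(3, 150))               # True
    print(should_cache_intermediate_results(1, 500))               # False: only one output
    print(should_cache_intermediate_results(3, 80))                # False: below default threshold
    print(should_cache_intermediate_results(3, 80, threshold=50))  # True: lower threshold, more ETLs cache
```

The last call shows why a low threshold makes more ETLs cache: the same ETL that stays uncached at 100 qualifies at 50.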

2.2 Parameter 2: BroadcastJoin

Spark's BroadcastJoin is enabled in the system by default. However, BroadcastJoin is not suitable when both joined tables are large.

You can turn off the "BroadcastJoin" option to reduce this risk. In special cases, contact Guandata technical support to decide whether to enable it.
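In open-source Spark, a broadcast join is chosen when the estimated size of one side is at or below `spark.sql.autoBroadcastJoinThreshold` (10 MB by default; a value of -1 disables it). The broadcast side is copied to every executor, so it has to be small. The sketch below is a simplified model of that size check, not Spark's planner: it ignores join hints, join types and adaptive execution, and `broadcast_enabled` is a hypothetical stand-in for the BroadcastJoin switch.

```python
# Simplified model of Spark's size-based broadcast-join decision.
# Not Spark's real planner: join hints, join types and adaptive execution are ignored.
# `broadcast_enabled` is a hypothetical stand-in for the ETL's BroadcastJoin switch.

MB = 1024 * 1024
SPARK_DEFAULT_THRESHOLD = 10 * MB  # open-source default of spark.sql.autoBroadcastJoinThreshold


def choose_join_strategy(
    left_bytes: int,
    right_bytes: int,
    threshold_bytes: int = SPARK_DEFAULT_THRESHOLD,
    broadcast_enabled: bool = True,
) -> str:
    """Return 'broadcast' or 'shuffle' for an equi-join of two tables."""
    if not broadcast_enabled or threshold_bytes < 0:
        # Broadcasting switched off (a threshold of -1 disables it in Spark).
        return "shuffle"
    # Only the smaller side is a broadcast candidate; it is copied to every executor.
    if min(left_bytes, right_bytes) <= threshold_bytes:
        return "broadcast"
    return "shuffle"


if __name__ == "__main__":
    print(choose_join_strategy(5 * MB, 50_000 * MB))                           # broadcast
    print(choose_join_strategy(20_000 * MB, 50_000 * MB))                      # shuffle: both large
    print(choose_join_strategy(5 * MB, 50_000 * MB, broadcast_enabled=False))  # shuffle
```

With accurate sizes, two large tables fall through to a shuffle join. If the threshold is raised or a table's size is underestimated, however, a large table can end up on the broadcast path, which is one reason broadcasting is risky for large tables.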

2.3 Parameter 3: Spark Single Job Timeout (minutes)

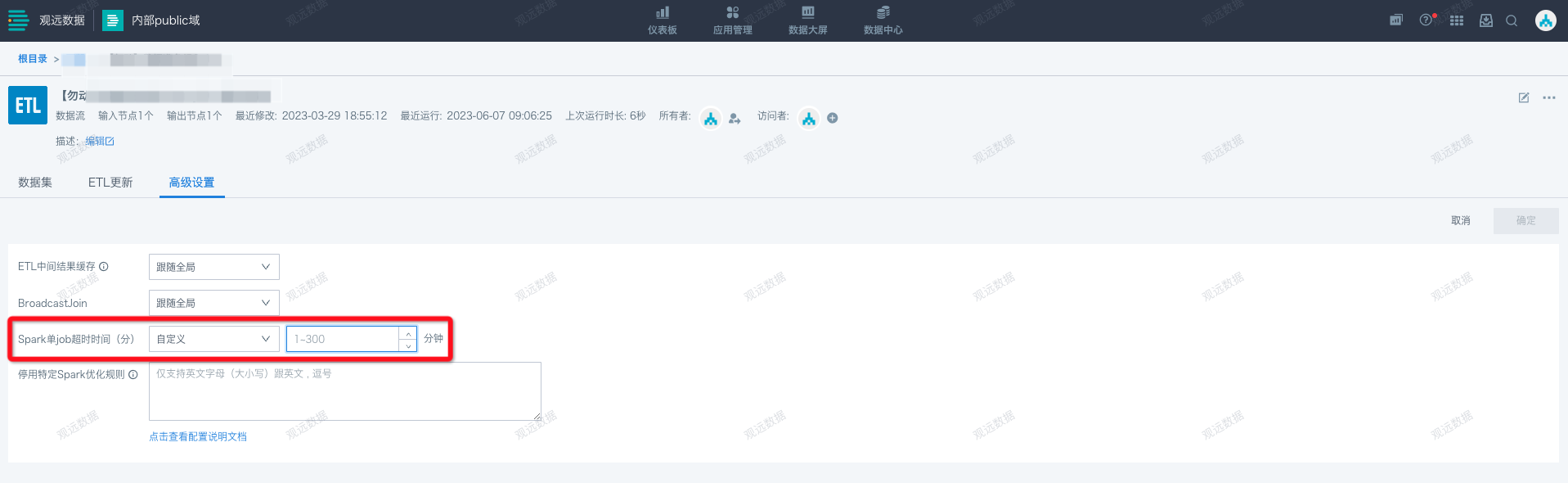

This setting gives finer control over an individual ETL task. Setting a Spark run timeout limits how long the task can run, so that it finishes within a reasonable time frame instead of causing delays and wasting resources.

- Default: follows the global setting.

- Custom duration range: 1-300 minutes.
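The two options above amount to a simple resolution rule: no custom value means the global timeout applies, and a custom value must lie between 1 and 300 minutes. The sketch below expresses that rule; the function name and the global value of 60 minutes used in the usage lines are hypothetical, since the document does not state the global default.

```python
from typing import Optional

# Hypothetical resolution of the per-ETL Spark timeout; names are illustrative.
MIN_TIMEOUT_MINUTES = 1
MAX_TIMEOUT_MINUTES = 300


def effective_timeout_minutes(custom_minutes: Optional[int], global_minutes: int) -> int:
    """Return the timeout an ETL would run with under the assumed rules."""
    if custom_minutes is None:
        # No custom value: follow the global setting.
        return global_minutes
    if not MIN_TIMEOUT_MINUTES <= custom_minutes <= MAX_TIMEOUT_MINUTES:
        raise ValueError(
            f"custom timeout must be {MIN_TIMEOUT_MINUTES}-{MAX_TIMEOUT_MINUTES} minutes, "
            f"got {custom_minutes}"
        )
    return custom_minutes


if __name__ == "__main__":
    GLOBAL_TIMEOUT = 60  # hypothetical global value for this example
    print(effective_timeout_minutes(None, GLOBAL_TIMEOUT))  # 60
    print(effective_timeout_minutes(30, GLOBAL_TIMEOUT))    # 30
```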

2.4 Parameter 4: Spark Runtime Configuration

Set Spark Runtime Parameters (Effective from version 6.5)

The ETL computing engine presets some Spark runtime rules. In extreme cases, some of these rules may cause an ETL run to fail. For such ETLs, you can configure parameters and rules so that the ETL uses specific values at run time. Multiple rules are supported, one per line.

Currently supported parameters:

- shuffleBytes (unit: G): guandata.jobLimit.shuffleBytes=200

- Output dataset rows (unit: 100 million): guandata.jobLimit.numOfOutputRows=1

- shuffleDisk threshold: guandata.shuffleDisk.threshold=0.85

- tableExpansionRate: guandata.jobLimit.tableExpansionRate=100

- Broadcast join threshold: spark.sql.autoBroadcastJoinThreshold=10Mb

Setting example (one rule per line):

guandata.jobLimit.shuffleBytes=200
spark.sql.autoBroadcastJoinThreshold=10Mb

If you are not familiar with these parameters, do not configure them yourself; contact Guandata support first.
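Because rules are entered one per line in `key=value` form, a typo in a key is easy to miss. The sketch below parses such text and rejects keys outside the list of supported parameters in this section. It is a hypothetical client-side check, not the platform's own validation; values are kept as strings, and treating a repeated key as an override is an assumption.

```python
from typing import Dict

# Parameter names listed in this section; the validation itself is a hypothetical sketch.
SUPPORTED_KEYS = {
    "guandata.jobLimit.shuffleBytes",
    "guandata.jobLimit.numOfOutputRows",
    "guandata.shuffleDisk.threshold",
    "guandata.jobLimit.tableExpansionRate",
    "spark.sql.autoBroadcastJoinThreshold",
}


def parse_runtime_rules(text: str) -> Dict[str, str]:
    """Parse newline-separated key=value rules into a dict (values kept as strings)."""
    rules: Dict[str, str] = {}
    for line_no, raw in enumerate(text.splitlines(), start=1):
        line = raw.strip()
        if not line:
            continue  # ignore blank lines
        key, sep, value = line.partition("=")
        key, value = key.strip(), value.strip()
        if not sep or not key or not value:
            raise ValueError(f"line {line_no}: expected key=value, got {raw!r}")
        if key not in SUPPORTED_KEYS:
            raise ValueError(f"line {line_no}: unsupported parameter {key!r}")
        rules[key] = value  # assumption: a repeated key overrides the earlier one
    return rules


if __name__ == "__main__":
    example = """
guandata.jobLimit.shuffleBytes=200
spark.sql.autoBroadcastJoinThreshold=10Mb
"""
    print(parse_runtime_rules(example))
```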

Disable Specific Spark Optimization Rules (effective before version 6.5; no longer effective from version 6.5)

The ETL computing engine presets some Spark optimization rules, such as OptimizeRepartition and ColumnPruning. In very rare cases, some of these rules may cause an ETL run to fail. For such ETLs, you can configure the run to skip specific preset optimization rules.

Multiple rules can be disabled at the same time, separated by commas, as shown below.

CombineUnions,ConstantPropagation
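The disabled-rule setting is a plain comma-separated list. The sketch below normalizes such a list (trimming spaces, dropping empty entries and duplicates). For comparison, open-source Spark has a similar setting, `spark.sql.optimizer.excludedRules`, which takes comma-separated fully qualified rule names; the second helper builds that form on the assumption that each name is a built-in Catalyst optimizer rule (true for CombineUnions and ConstantPropagation). Both helpers are hypothetical; how Guandata applies the list internally is not documented.

```python
from typing import Iterable, List

# Hypothetical helpers; how Guandata applies the list internally is not documented.
CATALYST_OPTIMIZER_PACKAGE = "org.apache.spark.sql.catalyst.optimizer"


def parse_disabled_rules(setting: str) -> List[str]:
    """Split a comma-separated rule list, trimming spaces and dropping empties/duplicates."""
    seen = set()
    rules: List[str] = []
    for part in setting.split(","):
        name = part.strip()
        if name and name not in seen:
            seen.add(name)
            rules.append(name)
    return rules


def to_spark_excluded_rules(names: Iterable[str]) -> str:
    """Build a value for open-source Spark's spark.sql.optimizer.excludedRules.

    Assumes every name is a built-in Catalyst optimizer rule in CATALYST_OPTIMIZER_PACKAGE.
    """
    return ",".join(f"{CATALYST_OPTIMIZER_PACKAGE}.{name}" for name in names)


if __name__ == "__main__":
    rules = parse_disabled_rules("CombineUnions, ConstantPropagation,,CombineUnions")
    print(rules)  # ['CombineUnions', 'ConstantPropagation']
    print(to_spark_excluded_rules(rules))
```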

If you encounter problems during configuration, please contact Guandata support.