Error Description

1. Overview

This document describes common error messages in Guandata BI modules such as multi-source data integration, data preparation, data analysis and visualization, and subscription distribution and alerts, together with their causes and solutions.

2. Multi-source Data Integration

2.1. Dataset

/guandata-store/table_cache/guanbi/XXX doesn't exist

Problem Description:

When creating a dataset from a Hive database, the SQL runs and previews normally, but the following error is reported: /guandata-store/table_cache/guanbi/XXX doesn't exist.

Cause of Error:

The dataset is still in the queue after creation and has not finished running.

Solution:

Wait for the task to finish. This error usually appears because users click Preview or perform other operations before the dataset has finished running.

Field SKU is not unique in Record

Problem Description:

The SQL runs normally in Oracle, but creating a dataset from it in Guandata reports: Field SKU is not unique in Record.

Cause of Error:

Guandata does not support duplicate field names. Oracle Client only queries and displays the result, so duplicate names are tolerated there; Guandata has to store the result, so every field needs a distinct name.

Solution:

Rename the duplicate fields, for example by giving each one a distinct alias in the SQL.

Prepared statement needs to be re-prepared

Problem Description:

When creating a new dataset, the overview prompts "Database exception, please contact the database administrator, error details: Prepared statement needs to be re-prepared".

Cause of Error:

This error is raised by the source database itself and is related to its table cache size settings.

Solution:

Resolve it by increasing the following parameters on the database:

set global table_open_cache=16384;

set global table_definition_cache=16384;

column ambiguously defined

Problem Description:

When creating a dataset, the database preview reports an error: "column ambiguously defined"

Cause of Error:

- The column is not clearly defined. For example, a field in the SELECT list exists in both tables in the FROM clause, so the database cannot tell which table the field comes from.

- The query contains duplicate fields, for example: select a.name, a.name
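
As a rough illustration of the first cause, the sketch below reproduces the same class of error with Python's built-in sqlite3 module rather than Oracle (SQLite words it as "ambiguous column name" instead of "column ambiguously defined"). The tables orders and customers are hypothetical. Qualifying every column with its table alias and giving each output column a distinct alias removes both causes listed above.

```python
import sqlite3

# In-memory SQLite database standing in for the source database.
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE orders (id INTEGER, name TEXT);
    CREATE TABLE customers (id INTEGER, name TEXT);
    INSERT INTO orders VALUES (1, 'order-1');
    INSERT INTO customers VALUES (1, 'alice');
    """
)

# "name" exists in both tables, so the database cannot tell which one is meant.
ambiguous_sql = "SELECT name FROM orders JOIN customers ON orders.id = customers.id"

# Qualified with table aliases, and each output column gets a distinct alias.
qualified_sql = (
    "SELECT o.name AS order_name, c.name AS customer_name "
    "FROM orders o JOIN customers c ON o.id = c.id"
)

try:
    conn.execute(ambiguous_sql)
    ambiguous_error = None
except sqlite3.OperationalError as exc:
    ambiguous_error = str(exc)

rows = conn.execute(qualified_sql).fetchall()
print(ambiguous_error)  # ambiguous column name: name
print(rows)  # [('order-1', 'alice')]
```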

No such file or directory

Problem Description:

Case 1: After a dataset update task is canceled, the same dataset is updated again immediately, and the update fails with No such file or directory.

Case 2: A scheduled automatic update set for 1 am fails with this error.

Cause of Error:

Case 1: When the user cancels an update task, the system starts deleting everything in the task's directory. The task is already displayed as finished, but the deletion is still in progress. If the dataset is updated again at this point, the new task creates folders and files that the unfinished cleanup of the canceled task then deletes, resulting in the No such file or directory error.

Case 2: The BI system automatically deletes empty folders at 1 am every day. During a dataset update, a new folder is created first and the Avro file is written into it afterwards. There is a small chance that the scheduled cleanup task deletes the newly created, still empty folder in between, which causes the No such file or directory error.

Solution:

Case 1 Solution:

- Do not update the dataset immediately after canceling the task. Wait a few minutes before updating.

- If this error occurs, try updating again after a while.

Case 2 Solution:

- Schedule the automatic update at a time other than 1 am.

- Configure the failure retry function. As long as the retry runs after 1 am, the folder is no longer deleted and the automatic update succeeds.

Cannot call methods on a stopped SparkContext.

Problem Description:

Tasks fail with the error: Cannot call methods on a stopped SparkContext. Dataset updates and ETL runs fail in large batches.

Cause of Error:

High disk usage.

Solution:

How to check: review disk usage in resource monitoring.

Contact operations and maintenance to check whether the disk can be cleaned up. If it cannot, plan a disk expansion.

java.io.IOException: org.apache.hadoop.hive.ql.metadata.HiveException: java.lang.ClassCastException

Problem Description:

Dataset update error: java.io.IOException: org.apache.hadoop.hive.ql.metadata.HiveException: java.lang.ClassCastException.

Cause of Error:

Type conversion exception. The original field type and the new field type are inconsistent.

Solution:

Check whether the dataset preview succeeds and whether the previewed data contains fields with obviously unexpected types or values.

total length XXX

Problem Description:

A new TiDB dataset previews successfully but fails to update. The error message is the entire SQL statement followed by total length XXX.

Cause of Error:

A syntax error caused by comments in the SQL not being ignored. The log shows the real error: You have an error in your SQL syntax; check the manual that corresponds to your TiDB version for the right syntax to use line XXX.

Solution:

Delete the comments from the SQL. The dataset then updates normally.
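
If such SQL is generated or maintained elsewhere, the comment removal can be scripted. The following is a minimal Python sketch, assuming only standard -- line comments and /* */ block comments; MySQL/TiDB # comments and backslash escapes inside string literals are not handled. strip_sql_comments is a hypothetical helper, not part of Guandata.

```python
def strip_sql_comments(sql: str) -> str:
    """Remove -- line comments and /* */ block comments outside quoted text."""
    out = []
    i, n = 0, len(sql)
    quote = None  # quote character of the literal we are inside, if any
    while i < n:
        ch = sql[i]
        if quote:
            # Inside a literal: copy verbatim until the closing quote.
            # A doubled quote ('') closes and immediately reopens the literal.
            out.append(ch)
            if ch == quote:
                quote = None
            i += 1
        elif ch in ("'", '"', "`"):
            quote = ch
            out.append(ch)
            i += 1
        elif sql.startswith("--", i):
            end = sql.find("\n", i)
            i = n if end == -1 else end  # keep the newline itself
        elif sql.startswith("/*", i):
            end = sql.find("*/", i + 2)
            i = n if end == -1 else end + 2
            out.append(" ")  # keep the tokens on either side apart
        else:
            out.append(ch)
            i += 1
    return "".join(out)


sql = "SELECT a, -- first column\n  b /* second */ FROM t WHERE c = '--not a comment'"
cleaned = strip_sql_comments(sql)
print(cleaned.split())
# ['SELECT', 'a,', 'b', 'FROM', 't', 'WHERE', 'c', '=', "'--not", 'a', "comment'"]
```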

Data volume exceeds allowed threshold

Problem Description:

Web Service dataset update error: Data volume exceeds allowed threshold.

Cause of Error:

To protect the update success rate and BI performance, the system limits the data volume of a single Web Service dataset update to 19M.

Solution:

Modify the returned fields to reduce the data volume of each update.
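
To estimate whether a payload is near the limit before updating, its size can be measured and unneeded fields dropped. This sketch assumes that 19M means 19 MiB and that the volume is comparable to the size of the records serialized as UTF-8 JSON; how Guandata actually measures it is not documented here. The helper names and sample records are hypothetical.

```python
import json

# Assumption: "19M" is read as 19 MiB of UTF-8 encoded JSON.
LIMIT_BYTES = 19 * 1024 * 1024


def payload_size(records: list) -> int:
    """Size in bytes of the records serialized as UTF-8 JSON."""
    return len(json.dumps(records, ensure_ascii=False).encode("utf-8"))


def keep_fields(records: list, fields: list) -> list:
    """Keep only the listed fields in every record."""
    return [{k: r[k] for k in fields if k in r} for r in records]


# Hypothetical response: 50,000 records, each with a 500-character description.
records = [{"id": i, "name": f"item-{i}", "description": "x" * 500} for i in range(50_000)]

full = payload_size(records)
slim = payload_size(keep_fields(records, ["id", "name"]))
print(full > LIMIT_BYTES, slim <= LIMIT_BYTES)  # True True
```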

Disk I/O error: Failed to open HDFS file

Problem Description:

Direct connection dataset update error: Disk I/O error: Failed to open HDFS file.

Cause of Error:

The database cannot find the relevant file.

Solution:

Confirm the database connection information. First check whether anything has changed in the source database, then try executing the update SQL directly in the source database. It is recommended to contact the internal database administrator for further handling.

Error getting connection from data source HikariDataSource(HikariPool-10)

Problem Description:

Dataset update error: Error getting connection from data source HikariDataSource(HikariPool-10)

Cause of Error:

This error is usually caused by a full database connection pool, possibly because connections from previous tasks were not completely released. As long as the data account and network environment are normal, a manual update usually succeeds. If the manual update also fails, check whether the test connection of the data account works. If it does, BI can reach the client database, and the BI connection pool is most likely full; check the detailed logs from the time of the error.

Solution:

(1) If the business needs real-time data without any caching, enable real-time card data-no cache on the dataset.

(Note that with this setting, every time the report is used, a request is sent directly to the dataset's source, which increases the load on the database.)

(2) If a large number of dataset updates fail because the update times are too concentrated, stagger the scheduled update times.
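
Staggering is configured on each dataset's update schedule in BI; the sketch below only shows the arithmetic of spreading several updates across a window at a fixed interval. The dataset names, start time, and 15-minute interval are hypothetical.

```python
from datetime import datetime, timedelta


def stagger_schedule(dataset_ids, start: str, interval_minutes: int) -> dict:
    """Give each dataset its own start time, `interval_minutes` apart.

    `start` is an "HH:MM" string; times wrap around midnight.
    """
    base = datetime.strptime(start, "%H:%M")
    return {
        ds: (base + timedelta(minutes=i * interval_minutes)).strftime("%H:%M")
        for i, ds in enumerate(dataset_ids)
    }


schedule = stagger_schedule(["sales", "inventory", "orders", "returns"], "02:00", 15)
print(schedule)
# {'sales': '02:00', 'inventory': '02:15', 'orders': '02:30', 'returns': '02:45'}
```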

Packet for query is too large

Problem Description:

Error reported during dataset update/extraction: Packet for query is too large.

Cause of Error:

A configuration parameter of the MySQL instance built into BI is set too low.

Solution:

Guandata operations and maintenance staff need to modify the built-in MySQL configuration. Please contact Guandata after-sales staff for assistance.

/guandata-store/table_cache/guanbi/********* is not a Delta table.

Problem Description:

When configuring data extraction, the SQL preview is normal, but after configuration, this error appears in the overview: /guandata-store/table_cache/guanbi/********* is not a Delta table.

Cause of Error:

After configuration, the extraction task is still running and the data has not been fully extracted, so this error appears.

Solution:

Wait until the task is completed before checking again.

java.nio.channels.UnresolvedAddressException

Problem Description:

Preview is normal, but java.nio.channels.UnresolvedAddressException is reported when extracting the dataset.

Cause of Error:

Some HDFS node addresses cannot be resolved. A preview only accesses one or two nodes, whereas a full extraction accesses many nodes, so the unresolvable address is often hit only during extraction.

Solution:

Troubleshooting Steps:

- Check whether the source database type is Impala or Hive.

- Check whether java.nio.channels.UnresolvedAddressException is reported during extraction or preview (the preview may be normal, but extraction will definitely report the error).

Contact developers to check the configuration.
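
Before contacting developers, it may help to confirm from the BI server which node names fail to resolve. This Python sketch uses the operating system resolver through socket.getaddrinfo, which approximates, but is not guaranteed to match, what the JVM running the extraction sees. The node names are hypothetical; .invalid is a reserved top-level domain that never resolves.

```python
import socket


def unresolved_hosts(hosts) -> list:
    """Return the host names that the local resolver cannot resolve."""
    failed = []
    for host in hosts:
        try:
            socket.getaddrinfo(host, None)
        except socket.gaierror:
            failed.append(host)
    return failed


# Hypothetical HDFS node list; replace it with the real node host names.
nodes = ["127.0.0.1", "datanode-01.invalid"]
failed = unresolved_hosts(nodes)
print(failed)
```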

Error retrieving next row

Problem Description:

Dataset update error: Error retrieving next row.

Cause of Error:

A timeout or network fluctuation caused a failure to fetch the next row of data.

Solution:

- Go to Task Management - View Task Details. If there is no fetch record, the cause is a timeout; if fetching failed partway through, the cause is network fluctuation.

- Enable the failure retry function on the dataset so that a failed update is retried automatically.
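
The failure retry option is a dataset setting in BI. The Python sketch below only illustrates the general idea of retrying after a delay, which is also why waiting and updating again helps with transient errors. The attempt count, delays, and function names are hypothetical and do not describe Guandata's actual retry policy.

```python
import time


def run_with_retry(task, attempts=3, delay_seconds=60.0, backoff=2.0, sleep=time.sleep):
    """Call `task` until it succeeds or `attempts` runs are used up.

    The wait is multiplied by `backoff` after each failure (60 s, 120 s, ...).
    """
    wait = delay_seconds
    for attempt in range(1, attempts + 1):
        try:
            return task()
        except Exception:
            if attempt == attempts:
                raise  # out of retries: surface the last error
            sleep(wait)
            wait *= backoff


# Usage: a hypothetical fetch that fails twice with a transient error.
calls = {"n": 0}


def flaky_fetch():
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("Error retrieving next row")
    return "ok"


waits = []  # record the waits instead of really sleeping
result = run_with_retry(flaky_fetch, attempts=3, delay_seconds=1, sleep=waits.append)
print(result, waits)  # ok [1, 2.0]
```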

empty.max

Problem Description:

Error reported when uploading a file: empty.max.

Cause of Error:

The row and column structure of the file is non-standard or incompatible.

Solution:

Modify the file so that it contains only plain row-and-column data, without Excel formatting such as merged cells.

"Illegal conversion"

Problem Description:

After modifying the field type of the dataset, an "illegal conversion" error is reported during update.

Cause of Error:

Directly converting data types in the data structure is not recommended. For example, changing STRING directly to TIMESTAMP is not supported.

Solution:

To convert an expression from one data type to another, use a conversion function in the database, most commonly CAST.

Query exceeded distributed user memory limit of XXX

Problem Description:

When adding a dataset with a large date range and a large amount of data, the error is reported: Query exceeded distributed user memory limit of XXX.

Cause of Error:

Usually the SQL is too complex for the available query memory.

Solution:

If the data volume cannot be reduced, check whether the SQL can be optimized to use less memory:

- Do not select unnecessary fields; this reduces memory pressure.

- For SQL involving partition by, group by, order by, join, and similar operations, generate tables in berserker or build wide tables first, and then query them in BI. This is faster and more reliable.

Exception: Memory limit (total) exceeded: would use xx GiB

Problem Description:

The dataset occasionally reports an error such as Exception: Memory limit (total) exceeded: would use xx GiB.

Cause of Error:

Insufficient memory/space.

Solution:

- Optimize queries: Check your query statements, try to reduce unnecessary calculations and data loading, and use more concise and efficient queries.

- Reduce data volume: If your data volume is too large, consider narrowing the query range or processing the data in batches to reduce memory usage.

- Increase memory: If your server configuration allows, try increasing the memory size to meet larger data processing needs.

- Contact Guandata customer service: If the above methods cannot solve the problem, it is recommended to contact Guandata after-sales staff for more detailed technical support and solutions.

Incorrect syntax near ";"/missing right parenthesis

Problem Description:

The same SQL executes normally locally, but BI preview reports: Incorrect syntax near ";"/missing right parenthesis.

Cause of Error:

The preview first writes the result of the input SQL into a temporary table and then reads the final result from that table, so the input SQL is embedded in a larger statement. A semicolon at the end of the input SQL terminates that statement early and causes a syntax error.

Solution:

Remove the semicolon at the end.
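
The error messages ("missing right parenthesis") suggest that the input SQL ends up inside parentheses. This sketch assumes such a wrapper, modeled as a subquery, and uses Python's sqlite3 module to show the failure and the fix; wrap_for_preview is a hypothetical helper, and the real preview statement used by BI may differ.

```python
import sqlite3


def wrap_for_preview(user_sql: str, limit: int = 200) -> str:
    """Embed user SQL in a preview query after removing trailing semicolons."""
    body = user_sql.strip()
    while body.endswith(";"):
        body = body[:-1].rstrip()
    return f"SELECT * FROM ({body}) AS preview LIMIT {limit}"


conn = sqlite3.connect(":memory:")
user_sql = "SELECT 1 AS x;"

# Embedding the SQL as-is leaves the semicolon inside the parentheses.
try:
    conn.execute(f"SELECT * FROM ({user_sql}) AS preview LIMIT 200")
    naive_failed = False
except sqlite3.Error:
    naive_failed = True

rows = conn.execute(wrap_for_preview(user_sql)).fetchall()
print(naive_failed, rows)  # True [(1,)]
```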

The maximum length of cell contents (text) is 32,767 characters

Problem Description:

Error reported when exporting dataset: The maximum length of cell contents (text) is 32,767 characters.

Cause of Error:

This is a limit of Excel itself: a single cell can contain at most 32,767 characters.

Solution:

Process the data so that no cell exceeds this limit, then export again. Reference document: https://blog.csdn.net/weixin_43957211/article/details/109077959.
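
One way to process the data is to split or truncate long text before export. The sketch below assumes the text is already available in Python; the helper names are hypothetical, and lengths are counted as Python characters, which may not match Excel's own count for every character.

```python
EXCEL_CELL_LIMIT = 32_767  # maximum number of characters in one Excel cell


def split_for_excel(text: str, limit: int = EXCEL_CELL_LIMIT) -> list:
    """Split text into consecutive chunks that each fit in one cell."""
    if not text:
        return [""]
    return [text[i:i + limit] for i in range(0, len(text), limit)]


def truncate_for_excel(text: str, limit: int = EXCEL_CELL_LIMIT, marker: str = "...") -> str:
    """Shorten text to fit one cell, ending with `marker` if anything was cut."""
    if len(text) <= limit:
        return text
    return text[: limit - len(marker)] + marker


long_text = "a" * 70_000
print([len(chunk) for chunk in split_for_excel(long_text)])  # [32767, 32767, 4466]
print(len(truncate_for_excel(long_text)))  # 32767
```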

null, message from server: "Host 'xxx.xxx.x.xxx'

Problem Description:

When creating a data account, the test connection reports: null, message from server: "Host 'xxx.xxx.x.xxx'.

Cause of Error:

Too many interrupted connections from the same IP within a short period (more than the max_connect_errors value of the MySQL database) caused MySQL to block that host.

Solution:

Ask the local database administrator to execute flush hosts to clear the host cache.

Domain name cannot be resolved, please ask the server administrator to confirm the DNS configuration: xxxx

Problem Description:

Error reported when adding an account in the data center: "Domain name cannot be resolved, please ask the server administrator to confirm the DNS configuration".

Cause of Error:

Network is unreachable.

Solution:

Modify the network configuration.

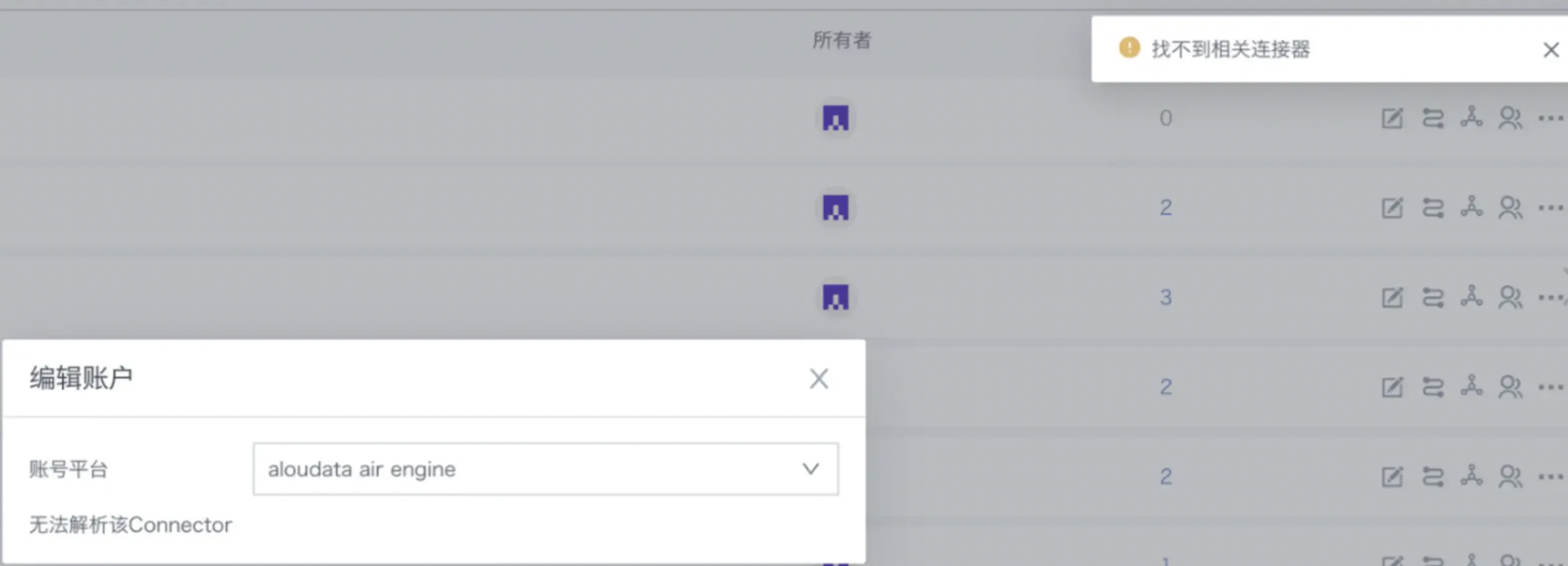

Cannot find related connector

Problem Description:

An Unknown type appears in the data account list, and the error "Cannot find related connector" is reported.

Some data accounts cannot be edited, or their lineage cannot be viewed.

Cause of Error:

There is a problem with the custom link.

Solution:

Turn the custom link switch off and on again. If the problem persists, contact Guandata after-sales staff for further assistance.

Account verification error Custom driver path:/guandata-store/jdbcdrivers/XXX does not exist

Problem Description:

In the data account, after selecting a custom driver and entering the relevant information, click Test Connection and the following error appears: Custom driver path:/guandata-store/jdbcdrivers/XXX does not exist.

Cause of Error:

MinIO is not enabled, resulting in the custom driver path not being found.

Solution:

Contact Guandata operation and maintenance to enable MinIO, and then re-upload the custom driver. For details, please contact Guandata after-sales staff.

Cannot reserve XXX MiB, not enough space

Problem Description:

High-performance dataset error: Cannot reserve XXX MiB, not enough space.

Cause of Error:

The data disk where ClickHouse (CK) stores its data is full. You need to increase the disk capacity, clean up unnecessary data, or mount the data on another, larger disk.

Solution:

Expand the capacity. The exact plan depends on the actual situation; if necessary, contact Guandata operations and maintenance for assistance.

Connect to xxx.xx.xxx.xxx:xxxx [/xxx.xx.xxx.xxx] failed: Connection refused

Problem Description:

Connect to xxx.xx.xxx.xxx:xxxx [/xxx.xx.xxx.xxx] failed: Connection refused

Cause of Error:

Accelerated datasets usually contain large amounts of data. Very large volumes (hundreds of millions to billions of rows) may exceed the memory the system allocates to ClickHouse and cause ClickHouse to restart. Subsequent dataset acceleration tasks then report Connection refused when connecting to ClickHouse.

Solution:

Contact Guandata operations and maintenance to increase the ClickHouse memory configuration on the server.

ClickHouse exception, code: 241, host: 10.250.156.78, port: 8123; Code: 241, e.displayText() = DB::Exception: Memory limit (for query) exceeded: would use 9.32 GiB (attempt to allocate chunk of 4718592 bytes), maximum: 9.31 GiB (version 20.4.5.36 (official build))

Problem Description:

ClickHouse exception, code: 241, host: 10.250.156.78, port: 8123; Code: 241, e.displayText() = DB::Exception: Memory limit (for query) exceeded: would use 9.32 GiB (attempt to allocate chunk of 4718592 bytes), maximum: 9.31 GiB (version 20.4.5.36 (official build))

Cause of Error:

The query needs more memory than the default per-query limit of 9.31 GiB.

Solution:

Contact Guandata operations and maintenance to increase the ClickHouse memory configuration on the server.

ClickHouse exception, code: 60, host: 172.16.171.254, port: 8123; Code: 60, e.displayText() = DB::Exception: Table guandata.f957aeedb2dcd4313812bad7_new doesn't exist. (version 20.4.5.36 (official build))

Problem Description:

ClickHouse exception, code: 60, host: 172.16.171.254, port: 8123; Code: 60, e.displayText() = DB::Exception: Table guandata.f957aeedb2dcd4313812bad7_new doesn't exist. (version 20.4.5.36 (official build))

Cause of Error:

An update deletes the original temporary table, creates a new temporary table, and then inserts the new data into it.

When two update tasks for the same resource run concurrently, one task may still be inserting new data when the other task deletes the temporary table, and the table-does-not-exist error is reported.

Solution:

Ensure that only one update task is running for the same resource at the same time.

Users should adjust the update frequency of datasets or ETL to avoid two data updates being too close in time.

If the number of partitions in a high-performance dataset exceeds 100, can it still be switched? If the partition parameter is not selected, will it affect the performance of the dataset?

Problem Description:

If the number of partitions in a high-performance dataset exceeds 100, can it still be switched? If the partition parameter is not selected, will it affect the performance of the dataset?

Cause of Error:

- When converting a normal dataset to a high-performance dataset, you must select a partition (partitioning is also the underlying mechanism that lets high-performance datasets query at high speed).

- However, too many partitions also slow down queries, so high-performance datasets are limited to 100 partitions. A dataset that exceeds this limit cannot be switched to a high-performance dataset.

Why do all related ETLs fail to run after converting the dataset to a high-performance dataset?

Problem Description:

Why do all related ETLs fail to run after converting the dataset to a high-performance dataset?

Cause of Error:

After conversion, a high-performance dataset is treated as a ClickHouse direct connection dataset. When it is used as an ETL input dataset, CK functions and Spark functions conflict: ETL cannot recognize fields and row/column permissions created with CK functions in the dataset, which causes ETL errors. Only datasets without row/column permissions and without new fields can participate in ETL.

Solution:

Only convert large datasets used for final card production to high-performance datasets. Do not convert datasets that also feed ETL processing. If a dataset is used for both cards and ETL, let the upstream ETL output one dataset for ETL use, and create another ETL that outputs a second dataset for high performance: one is converted to high performance for cards, and the other is used by ETL. See the figure below.

ru.yandex.clickhouse.except.ClickHouseException: ClickHouse exception, code: 210, host: xx, port: 8123; Connect to xx failed:

Problem Description:

When updating/converting/extracting a high-performance dataset, the error is reported: ru.yandex.clickhouse.except.ClickHouseException: ClickHouse exception, code: 210, host: 10.x.x.138, port: xxxx; Connect to 10.x.x.138:xxxx [/10.x.x.138] failed: connect timed out.

Cause of Error:

A connection timeout, or the configuration has been modified.

Solution:

First, check if there is a successful run record in history. If so, check the configuration and try again. If it still cannot be solved, contact Guandata after-sales for further troubleshooting.

Dataset update failed: unknown error

Problem Description:

An unknown error means that the update itself succeeded, but an error occurred while writing the historical task record, so the update was treated as failed. This is an occasional problem, and we will consider further optimizations.

3. Data Preparation

3.1. Smart ETL

Job Cancelled due to shuffle bytes limit, the threshold is XXX

Problem Description:

ETL run failed with error: "Job Cancelled due to shuffle bytes limit, the threshold is XXX".

Cause of Error:

Operations such as joins, aggregations, and window functions require Spark to partition the data by key, which generates shuffle data that is written to disk. With large data volumes, this shuffle data puts heavy pressure on the server's disk. Guandata's computing engine therefore monitors shuffle usage: if the shuffle data written by a single ETL output task exceeds 200G (the default configuration), the job engine kills the task and returns this error.

Solution:

- For users with sufficient disk space, the shuffle upper limit can be adjusted according to disk size: set it to about 60% of the total BI disk, and keep the maximum disk usage below 85%. This change must be made by Guandata.

- Upgrade to the latest private version of the job engine (1.1.0+). Version 1.1.0 adds a disk capacity check: shuffle usage detection is triggered only when disk usage exceeds 85%.

- If disk usage is already high, adjusting the shuffle upper limit is generally not recommended. Optimize the ETL instead (for example, by splitting it); this keeps disk usage under control and stays within the shuffle limit.
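
The sizing rule in the first point is simple arithmetic. The sketch below applies it to a hypothetical 2,000 GB disk; the function names are illustrative only, and the actual limit is changed by Guandata staff, not through code like this.

```python
def recommended_shuffle_limit_gb(total_disk_gb: float, ratio: float = 0.60) -> float:
    """Shuffle upper limit following the 60%-of-total-disk rule of thumb."""
    return round(total_disk_gb * ratio, 1)


def within_usage_target(used_gb: float, total_disk_gb: float, max_ratio: float = 0.85) -> bool:
    """True if disk usage stays below the 85% target."""
    return used_gb / total_disk_gb < max_ratio


total = 2000  # hypothetical total BI disk size in GB
print(recommended_shuffle_limit_gb(total))  # 1200.0
print(within_usage_target(1000, total))  # True  (50% used)
print(within_usage_target(1800, total))  # False (90% used)
```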

org.apache.spark.SparkException: Could not execute broadcast in 300 secs. You can increase the timeout for broadcasts via spark.sql.broadcastTimeout or disable broadcast join by setting spark.sql.autoBroadcastJoinThreshold to -1

Problem Description:

Error message: org.apache.spark.SparkException: Could not execute broadcast in 300 secs. You can increase the timeout for broadcasts via spark.sql.broadcastTimeout or disable broadcast join by setting spark.sql.autoBroadcastJoinThreshold to -1.

Cause of Error:

The DataFrame to be broadcast in the join is large and takes a long time to broadcast, while spark.sql.broadcastTimeout defaults to 5 * 60 seconds (300 seconds), which is often not enough. As ETLs become more complex, this error occurs more frequently.

Solution:

Set the spark.sql.broadcastTimeout parameter to a larger value, such as config("spark.sql.broadcastTimeout", "3600").

Found duplicate column(s) ...

Problem Description:

ETL reports duplicate fields (Found duplicate column(s) ..., as shown in the figure), although there are actually no identical fields.

Cause of Error:

Spark treats field names case-insensitively: uppercase and lowercase spellings are considered the same (for example, "FieldA" and "fielda").

Solution:

Check for field names that differ from the reported field only in letter case, rename them, and rerun.
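
Spark's spark.sql.caseSensitive setting is false by default, which is why such names collide. A quick way to find the colliding names in a field list is to group them by their lowercase form, as in this Python sketch (the column names are hypothetical).

```python
from collections import defaultdict


def case_insensitive_duplicates(columns) -> list:
    """Group column names that are equal when letter case is ignored."""
    groups = defaultdict(list)
    for name in columns:
        groups[name.lower()].append(name)
    return [names for names in groups.values() if len(names) > 1]


cols = ["FieldA", "fielda", "Amount", "order_id"]
print(case_insensitive_duplicates(cols))  # [['FieldA', 'fielda']]
```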

Job 2632 cancelled because SparkContext was shut down, engine lost

Problem Description:

ETL run error: Job 2632 cancelled because SparkContext was shut down, engine lost.

Cause of Error:

The job engine restarted, either because multiple large ETLs running at the same time drove disk usage too high, or because of a memory overflow.

Solution:

Clean up disk space and check the resource configuration; stagger the run times of long-running (CPU-intensive) ETLs; analyze each case individually.

Parquet data source does not support void data type

Problem Description:

All ETL nodes preview successfully, but the run error is: Parquet data source does not support void data type.

Cause of Error:

A field was created with a null value, and no valid field type was selected for it, so its type is void.

Solution:

Use a function to specify the type for null, such as cast(null as int).

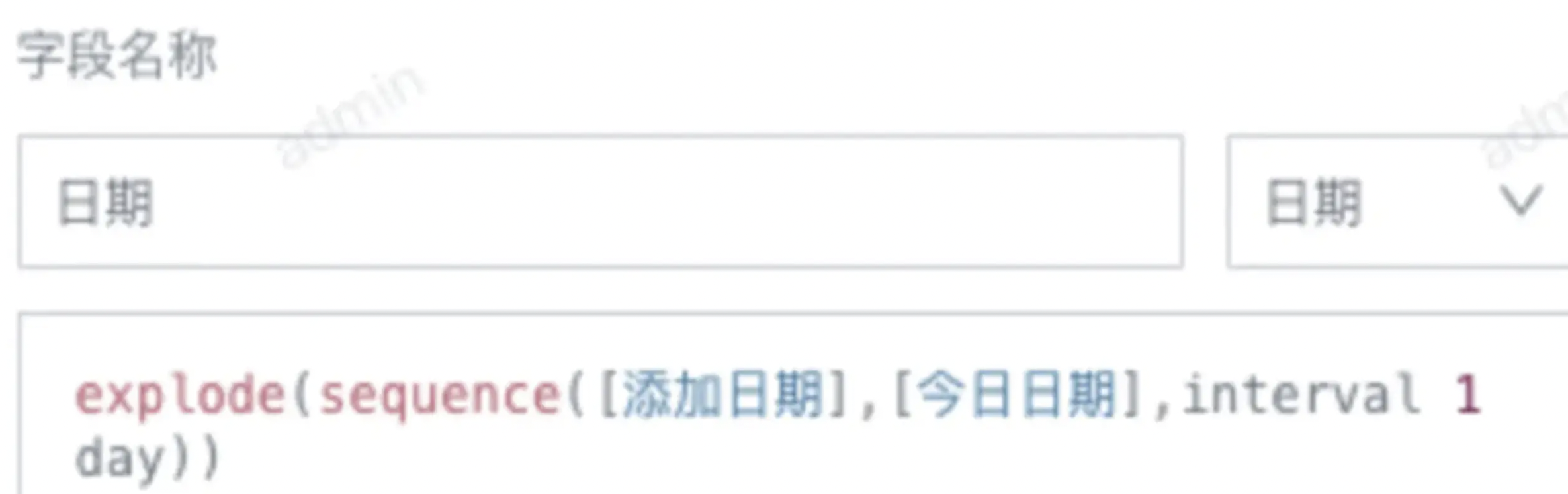

Illegal sequence boundaries: 19662 to 19606 by 1

Problem Description:

ETL run failed, error: Illegal sequence boundaries: 19662 to 19606 by 1.

Cause of Error:

The ETL uses the sequence function to expand the range between two dates by day (or month). The start date must be less than or equal to the end date; if it is greater, both preview and run report an error. 19662 and 19606 are the Unix dates (days since 1970-01-01) of the start and end dates, respectively.

Solution:

Check the end date to ensure that the end date is always greater than or equal to the start date.
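
The numbers in the error are day counts since 1970-01-01, so converting them shows which dates were involved. For the values above, this Python sketch gives a start date of 2023-11-01 and an end date of 2023-09-06, confirming that the start is after the end.

```python
from datetime import date, timedelta

EPOCH = date(1970, 1, 1)


def from_unix_date(days: int) -> date:
    """Convert a day count since 1970-01-01 into a calendar date."""
    return EPOCH + timedelta(days=days)


start, end = from_unix_date(19662), from_unix_date(19606)
print(start, end)  # 2023-11-01 2023-09-06
print(start <= end)  # False: the start date lies after the end date
```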

Illegal sequence boundaries

Problem Description:

ETL run failed, error: Illegal sequence boundaries: XXX to XXX by 1. Occasionally, the preview is normal, but the run reports an error.

Cause of Error:

The preview only shows the first 200 rows, so abnormal data outside those rows is not caught in time. The ETL typically uses the sequence function to expand the range between two dates by day (or month), and the start date must be less than or equal to the end date. If even one row has a start date greater than its end date, the run reports an error.

Solution:

Check the end date to ensure that the end date is always greater than or equal to the start date.
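
Because the preview only covers 200 rows, checking the whole input for inverted ranges before running can locate the offending rows. This Python sketch scans every row; the field names start_date and end_date and the sample data are hypothetical, and rows with a missing date are simply skipped here.

```python
from datetime import date


def invalid_ranges(rows, start_key="start_date", end_key="end_date") -> list:
    """Return (index, row) for every row whose start date is after its end date."""
    return [
        (i, row)
        for i, row in enumerate(rows)
        # Rows with a missing date are skipped in this sketch.
        if row[start_key] is not None
        and row[end_key] is not None
        and row[start_key] > row[end_key]
    ]


# Hypothetical data: 500 valid rows, then one inverted range at index 500,
# which a 200-row preview would never show.
rows = [{"start_date": date(2023, 1, 1), "end_date": date(2023, 1, 31)}] * 500
rows.append({"start_date": date(2023, 11, 1), "end_date": date(2023, 9, 6)})

bad = invalid_ranges(rows)
print([i for i, _ in bad])  # [500]
```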

Job aborted due to stage failure

Problem Description:

ETL run error: Job aborted due to stage failure: Total size of serialized results of 2700 tasks (1024.2 MiB) is bigger than spark.driver.maxResultSize (1024.0 MiB).

Cause of Error:

spark.driver.maxResultSize defaults to 1G. It limits the total size of the serialized results of all partitions for each Spark action (such as collect). The error means that the results returned by the executors to the driver exceed this limit.

Solution:

Increase spark.driver.maxResultSize from 1G to 2G. If that is still not enough, add an output node in the ETL to output the mergeable dataset first, and let the ETL reuse this node's result for the subsequent logic.

cancelled because SparkContext was shut down

Problem Description:

ETL run error: Job *** cancelled because SparkContext was shut down.

Cause of Error:

Usually, disk usage became too high while the task was running, and the job engine restarted.

Solution:

Clean up the disk to ensure there is enough available space; if it cannot be cleaned up, it is recommended that the customer expand the disk.

Field type mismatch

Problem Description:

When running/previewing ETL, the group aggregation operator reports "Field type mismatch: XXX".

Cause of Error:

Usually a field type has changed. In the example above, the error concerns the "sales quantity" field. Originally, the "sales quantity" field from the four upstream SQL inputs of this node was of type long, so it was also long when passed to the group aggregation. Later, the SQL input was modified or the type in the original dataset changed, so "sales quantity" became double, causing the type mismatch.

Solution:

Enter the group aggregation operator, remove the problematic field, and drag it in again.

Cannot broadcast the table that is larger than XXX

Problem Description:

ETL update error: Cannot broadcast the table that is larger than XXX.

Cause of Error:

The table exceeds Spark's broadcast join limit. A common cause is an invalid join condition that matches no data, which produces a large number of nulls after the join; the data then expands greatly and easily exceeds Spark's 8GB broadcast join limit.

Solution:

Modify the join field to ensure that the field types are consistent and the join is valid.

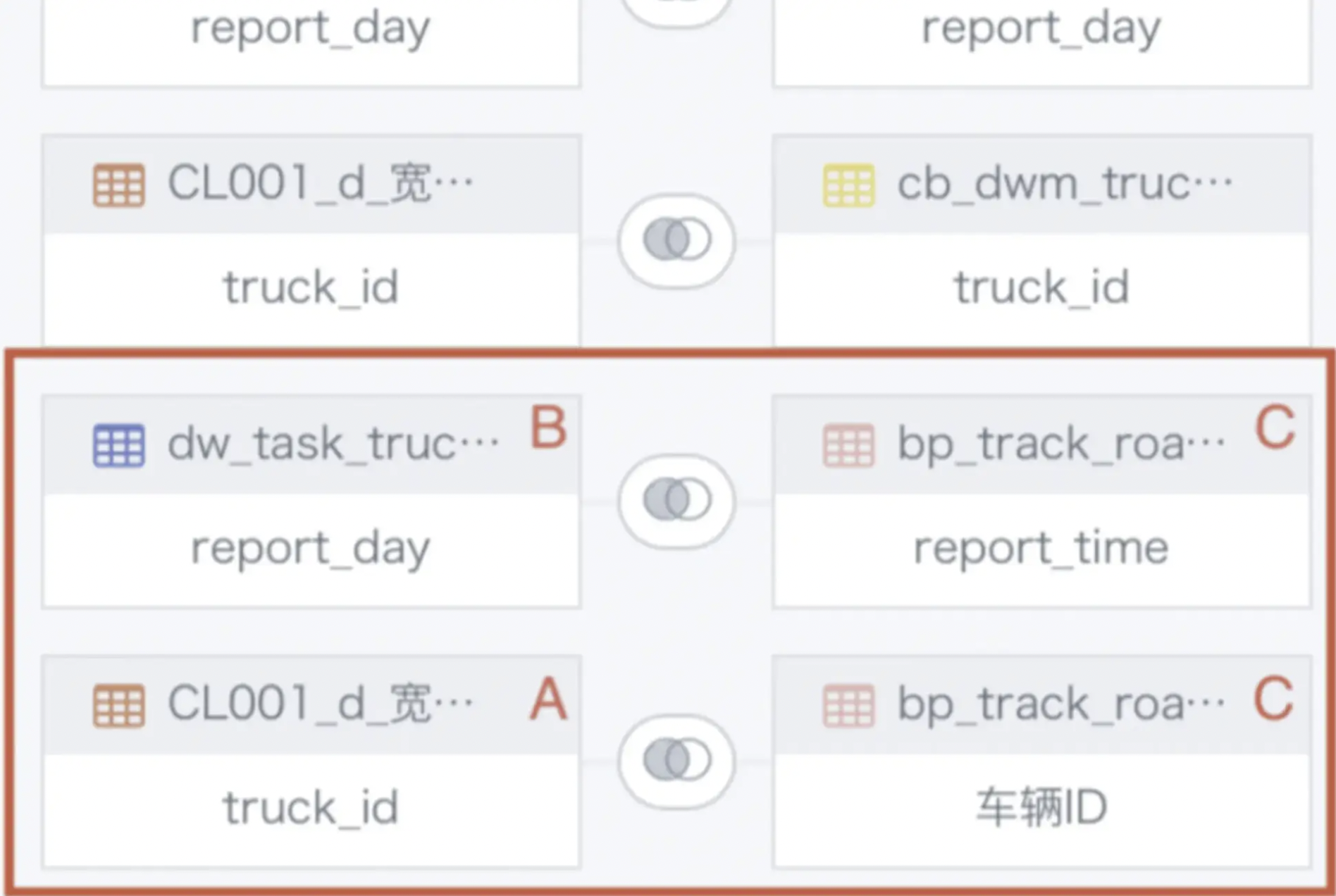

[message] = Reference 'id_1666072244947.truck_id' is ambiguous, could be id_1666072244947.truck_id, id_1666072244947.truck_id.; line 1 pos 0

Problem Description:

ETL join node error: [message] = Reference 'id_1666072244947.truck_id' is ambiguous, could be id_1666072244947.truck_id, id_1666072244947.truck_id.; line 1 pos 0.

Cause of Error:

Multi-table cross join. As shown above, because tables A and B have already been joined, A cannot be joined with C again when B is joined with C.

Solution:

Only specify one table as the main table for the join to avoid multi-table cross join; for complex situations, it is recommended to split into multiple join nodes.

Error in value replacement in ETL

Problem Description:

What are the possible reasons for value replacement errors in ETL?

Cause of Error:

It may be a field type issue. For example, replacing values in a numeric field with text causes an error.

Solution:

In this case, add a new field node before the replacement to convert the field type.

ETL group aggregation node prompts missing field, but the field is not actually missing

Problem Description:

ETL group aggregation node prompts missing field, but the field is not actually missing.

Cause of Error:

Most likely, the field ID or field type has changed. For example, a field in the input dataset changed (perhaps it was deleted and recreated, which gives it a new ID), while the ETL still references the original field. The two IDs no longer match, resulting in a missing field error.

Solution:

Drag the field in again.

ETL run error Job timed out

Problem Description:

ETL run error: Job timed out.

Cause of Error:

The corresponding Spark job of ETL timed out.

Solution:

The administrator can modify the maximum running time of the ETL task and the Spark single job timeout in Admin Settings > Operation and Maintenance Management > Parameter Configuration; if the running time is too long, it is recommended to optimize or split the ETL.

4. Data Analysis and Visualization

4.1. Card

"The current task has been cancelled, card: [xxx] may have a timeout problem, please optimize the card or try again later"

Problem Description:

"The current task has been cancelled, card: [xxx] may have a timeout problem, please optimize the card or try again later".

Cause of Error:

When a card runs longer than the threshold, the system considers the card design unreasonable and stops it, to prevent an extremely resource-intensive card from consuming all system resources.

Solution:

- First, check whether it is a problem with individual cards or a large number of cards.

- If a large number of cards report this error, first consider whether there is a backlog of tasks. Check the task running status in the admin settings. If all tasks in the system are slower than before or there is a serious backlog and queue, you can contact Guandata after-sales for assistance.

- If only a single card reports the error, check whether the card design is reasonable, for example whether it uses very resource-intensive functions or advanced calculations that could be optimized. Also check whether the data volume is large and whether some preprocessing can be moved to the ETL level to improve performance. If there is no room for adjustment at the card level, consider whether the time set in the card parameters is reasonable and needs to be increased.

Index xx out of bounds for length xx

Problem Description:

Card often reports error Index xx out of bounds for length xx.

Cause of Error:

There is concurrency in header calculation or secondary calculation. When the card access concurrency is high, this will occur.

Solution:

Upgrade to version 5.9.0 or later to avoid this error.

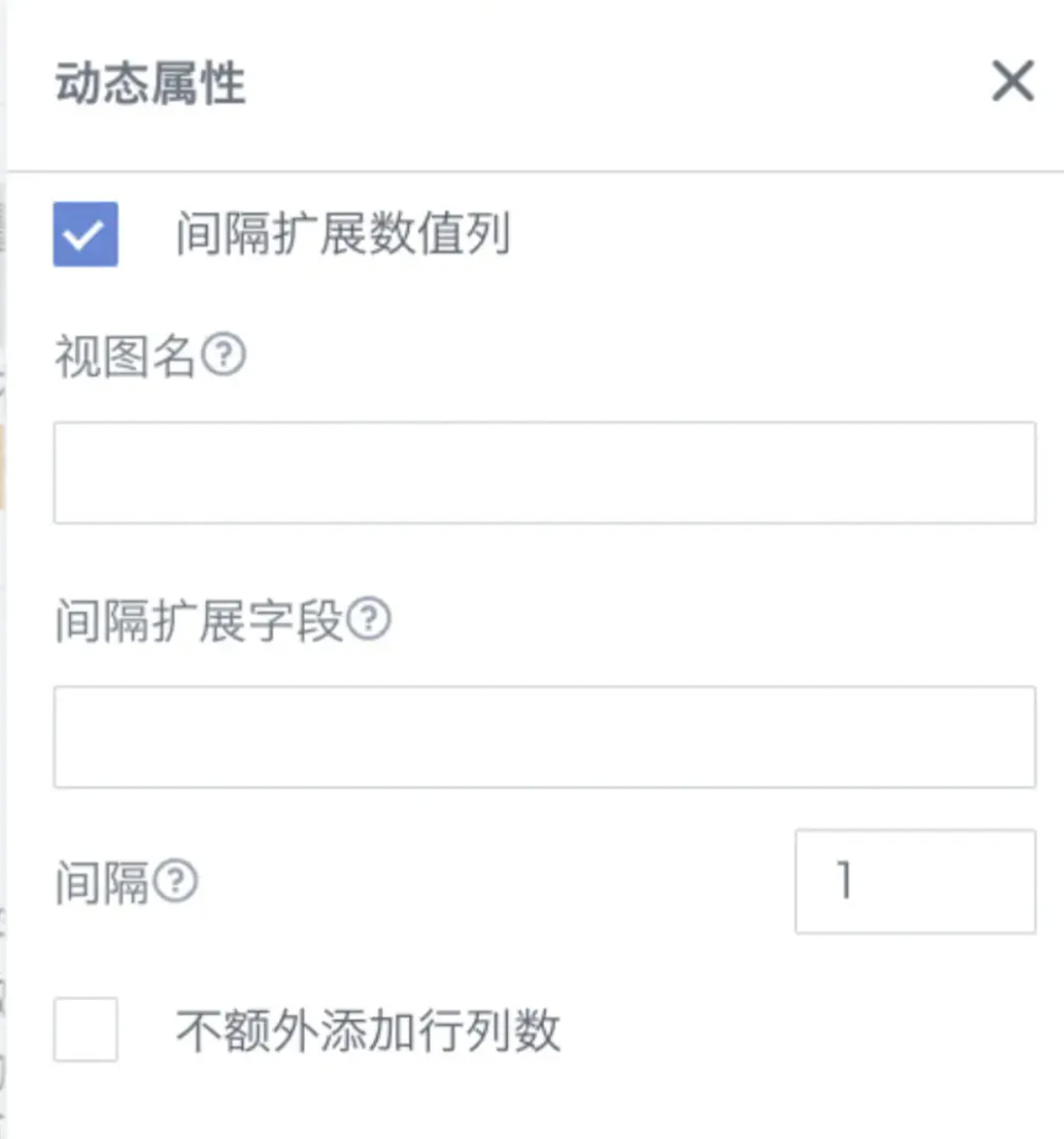

4.2. Complex Reports

Index 0 out of bounds for length 0

Problem Description:

In Complex Report 2.0, a template that uses LOOKUPEXP interval expansion reports this error when the interval expansion has not been configured.

Cause of Error:

In the template formula, interval expansion is checked, but the view name and field name for interval expansion are not configured.

Solution:

Configure the view name and expansion field for interval expansion.

5. Subscription Distribution and Alerts

5.1. Subscription

"An error occurred"

Problem Description:

When the subscription link is opened on a mobile device, the message "An error occurred" is displayed.

Cause of Error:

The user does not have permission for the corresponding card or page.

Solution:

Grant the user visitor or owner permission on the corresponding resource. (This behavior is optimized in version 5.6 and later.)

key not found: scanAppId

Problem Description:

A subscription sent to DingTalk reports the error: key not found: scanAppId.

Cause of Error:

The QR code login application information is not configured.

Solution:

Configure both the QR code login application information and the micro application information. BI sends DingTalk messages mainly using the micro application configuration, but the jump links in those messages rely on the QR code login configuration to enable password-free login.

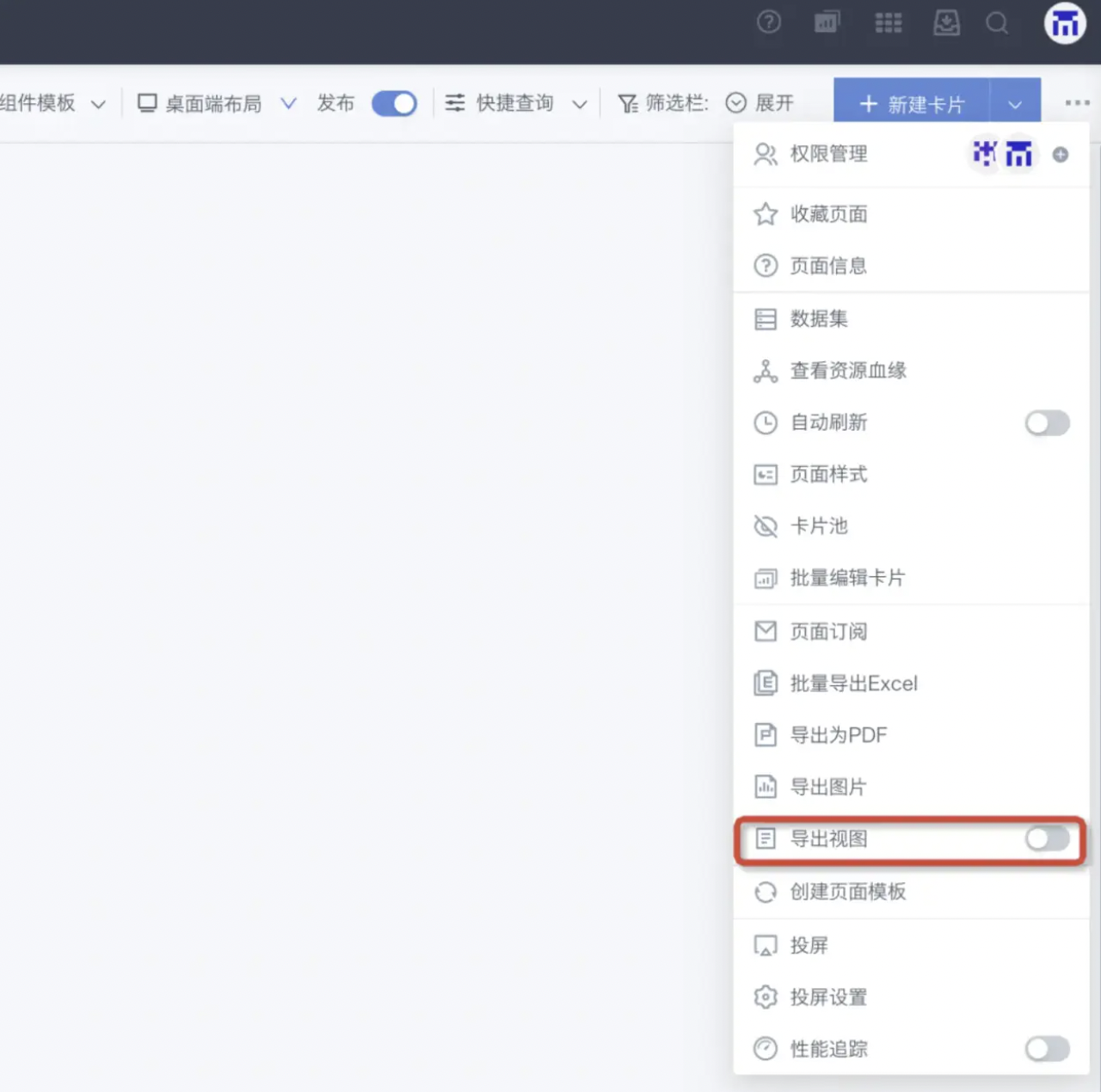

The width of the image sent by the subscription is inconsistent with the page

Problem Description:

The width of the image sent by the subscription is inconsistent with the page.

Cause of Error:

Subscriptions export pages using the same logic as manual export, so the width of the sent image depends on the export view settings.

Solution:

Check how the page is displayed in the export view and adjust the export view settings as needed.

Occasional Export CARD/PAGE FAILED

Problem Description:

Errors of this type include, but are not limited to: Export CARD FAILED, Export PAGE FAILED, and export failed due to load page 300000ms timeout: Timeout exceeded while waiting for event.

Cause of Error:

The subscription fails because the export fails. Possible causes include a timeout while fetching card or page data, a PDF service timeout, or an abnormal card or page on the dashboard.

Solution:

The relevant parameters need to be adjusted. Currently, it is recommended to contact Guandata after-sales support directly so they can evaluate the situation and make the specific adjustments.

5.2. Alerts

Failed to upload image : errCode = 99991672, errMsg = Access denied.

Problem Description:

Sending an alert to Feishu fails with the error: Failed to upload image : errCode = 99991672, errMsg = Access denied.

Cause of Error:

The Feishu application lacks the required permission.

Solution:

Configure permissions in the Feishu backend.

6. Open and Integration

6.1. OA Integration

Illegal request, please contact the application developer

Problem Description:

Feishu integration error: Illegal request, please contact the application developer.

Cause of Error:

During password-free login, Feishu verifies whether the source address of the request matches the callback address configured in the Feishu backend. If they do not match, this error is reported.

Solution:

- Check whether the domain name configured in Admin Settings > System Management > Advanced Settings on BI is correct.

- In the Feishu backend, check whether the redirect URL in the security settings of this application's development configuration is set to the BI domain name.

- If the error occurs when the application is opened from the Feishu workbench, check whether the desktop and mobile homepage addresses in the web application settings under the application capabilities of the Feishu application configuration are correct. If the domain name on BI is configured correctly, you can copy the link shown at the bottom of the Feishu configuration page on BI directly.
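Because Feishu compares the request's source address with the configured callback address, a mismatch is often a small difference in scheme, host, or port. The sketch below is a hypothetical diagnostic, not Feishu's actual verification logic: it compares the origin (scheme, host, port) of the BI domain with the redirect URL configured in the Feishu backend. It assumes both values are full URLs that include the scheme (for example `https://...`); the function names and the `example.com` addresses are illustrative only, and Feishu's exact matching rule may differ.

```python
from typing import Optional, Tuple
from urllib.parse import urlsplit


def origin(url: str) -> Tuple[str, str, Optional[int]]:
    """Return (scheme, host, port) for a URL, treating default ports as absent."""
    parts = urlsplit(url)
    scheme = parts.scheme.lower()
    host = (parts.hostname or "").lower()  # hostname is already lowercased; kept explicit
    port = parts.port
    # An explicit default port (http:80, https:443) is the same origin as no port.
    if (scheme, port) in {("http", 80), ("https", 443)}:
        port = None
    return scheme, host, port


def redirect_matches_bi_domain(bi_domain: str, redirect_url: str) -> bool:
    """Hypothetical check: does the Feishu redirect URL point at the BI domain's origin?"""
    return origin(bi_domain) == origin(redirect_url)


# Usage with placeholder addresses:
print(redirect_matches_bi_domain("https://bi.example.com", "https://bi.example.com/login/feishu"))
print(redirect_matches_bi_domain("https://bi.example.com", "http://bi.example.com/login/feishu"))
```

Comparing whole origins rather than raw strings avoids false alarms from paths, letter case, or an explicit default port, while still catching the common `http`/`https` mix-up.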

Signature timestamp parameter timeout

Problem Description:

DingTalk login failed: signature timestamp parameter timeout.

Cause of Error:

The timestamp generated by the server during DingTalk login differs from the DingTalk server time by more than 1 minute.

Solution:

Correct the server time, for example by synchronizing it with an NTP server.
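To confirm that clock drift is the cause, you can compare the server's clock with a trusted reference time before and after the adjustment. The sketch below is a hypothetical helper, not part of BI or the DingTalk API: it assumes you already have a reference time as an HTTP `Date` header string (for example, taken from a response returned by a trusted HTTPS server) and flags drift beyond the 1-minute tolerance described above.

```python
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime
from typing import Optional

# Tolerance taken from the error description: more than 1 minute of drift fails.
MAX_SKEW_SECONDS = 60


def clock_skew_seconds(reference_date_header: str, local_now: datetime) -> float:
    """Local time minus reference time in seconds (positive means the local clock is ahead)."""
    reference = parsedate_to_datetime(reference_date_header)  # aware datetime for "GMT"
    return (local_now - reference).total_seconds()


def skew_too_large(reference_date_header: str, local_now: Optional[datetime] = None) -> bool:
    """Hypothetical check: True if the local clock is off by more than the tolerance."""
    if local_now is None:
        local_now = datetime.now(timezone.utc)
    return abs(clock_skew_seconds(reference_date_header, local_now)) > MAX_SKEW_SECONDS


# Usage with a fixed example time (a real check would pass a live Date header):
ref = "Tue, 01 Jan 2030 00:00:00 GMT"
print(skew_too_large(ref, datetime(2030, 1, 1, 0, 1, 30, tzinfo=timezone.utc)))
```

An HTTP `Date` header only has one-second resolution, which is more than enough to detect a drift of a minute or more.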

oapi.dingtalk.com: Name or service not known

Problem Description:

DingTalk integration error: oapi.dingtalk.com: Name or service not known.

Cause of Error:

The server's outbound IP has changed, so the actual outbound IP no longer matches the outbound IP configured in the DingTalk application backend.

Solution:

Modify the "Basic Information - Development Management - Server Outbound IP" in the DingTalk application backend to the actual outbound IP.

You do not have permission to log in to this application, please contact the company administrator

Problem Description:

When logging in to the WeChat Work application, the following message is displayed: You do not have permission to log in to this application, please contact the company administrator.

Cause of Error:

- The account is not within the application's visibility scope.

- The wrong company was selected when scanning the QR code, so the login account does not belong to the selected company.

Solution:

First, check the application's visibility scope in the WeChat Work backend and confirm that the login account is within it. Then confirm that the company selected when scanning the QR code is the company you want to log in to.

no allow to access from your ip

Problem Description:

WeChat Work application login error: no allow to access from your ip.

Cause of Error:

In the WeChat Work backend, a newly created application has an enterprise trusted IP setting. This error occurs when that setting is empty or does not include the BI server's IP.

Solution:

Add the BI server's IP to the trusted IP setting. If BI runs on multiple servers, add the IPs of all of them.
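Since every BI server must appear in the trusted IP setting, a quick way to spot an omission is a set comparison. The following is a minimal sketch, assuming you have copied the trusted IPs from the WeChat Work backend into a list of individual addresses (it does not handle IP ranges) and know the IPs your BI servers use when calling WeChat Work. `missing_trusted_ips` is a hypothetical helper, and the 203.0.113.x addresses are documentation placeholders.

```python
import ipaddress
from typing import Iterable, List


def missing_trusted_ips(bi_server_ips: Iterable[str], trusted_ips: Iterable[str]) -> List[str]:
    """Return the BI server IPs that are absent from the trusted IP list."""
    # Parse into ip_address objects so formatting differences (e.g. stray spaces) don't matter.
    trusted = {ipaddress.ip_address(ip.strip()) for ip in trusted_ips}
    missing = []
    for ip in bi_server_ips:
        if ipaddress.ip_address(ip.strip()) not in trusted:
            missing.append(ip.strip())
    return missing


# Usage: two BI servers, but only one was added to the trusted IP setting.
print(missing_trusted_ips(["203.0.113.10", "203.0.113.11"], ["203.0.113.10"]))
```

The same comparison can be applied to the DingTalk "Server Outbound IP" setting described earlier in this section.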