ETL Data Result Accuracy Issues Summary

Common Issues

-

ETL preview data and result dataset are inconsistent, for example, data can be filtered in ETL, but there's no data in the result dataset;

-

ETL preview data and input dataset are inconsistent;

-

ETL calculated data is different each time;

-

ETL calculated data is incorrect.

Causes

1. Row and Column Permission Issues

Input datasets have row and column permissions set. In versions before 5.5, ETL preview is effective according to the ETL owner's row and column permissions, meaning ETL visitors can see data beyond their permissions during preview, but in the result dataset, they can only see data they have permissions for. So ETL preview data and result dataset inconsistency is normal.

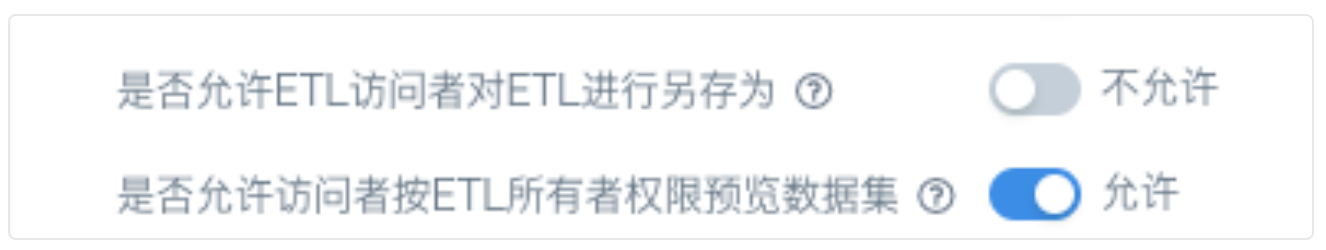

Solution: Starting from version 5.5, administrators can set "Whether to allow visitors to preview datasets according to ETL owner permissions" in "Administrator Settings-System Management-Advanced Settings".

2. ETL Running Lag

- ETL run time and ETL "Last Modified Time" are inconsistent: ETL logic was modified, but ETL wasn't run in time.

Solution: Use preview data to verify data when modifying, save ETL and run ETL in time.

- ETL run time and input dataset "Last Update Time" are inconsistent: There are multiple input datasets, ETL update logic settings are inappropriate.

Solution: It's recommended to use "Update ETL only after all checked datasets are updated"; if data timeliness is required and you need to set "Any checked dataset update will trigger", then ensure multiple input dataset update times are staggered, don't cluster together (time interval should be greater than ETL run duration, and leave room for queuing). Once multiple queuing tasks appear for the same ETL simultaneously, later duplicate queuing tasks will be automatically deduplicated and discarded.

Example: (Click to expand)

ETL is set to "Any checked dataset update will trigger", 5 input datasets update times are too close, causing the first run hasn't finished, new tasks have started queuing. Due to ETL queuing task deduplication mechanism, the ETL that should have run 5 times only ran twice, and the result dataset output is not the latest data.

3. ETL Preview Filtering

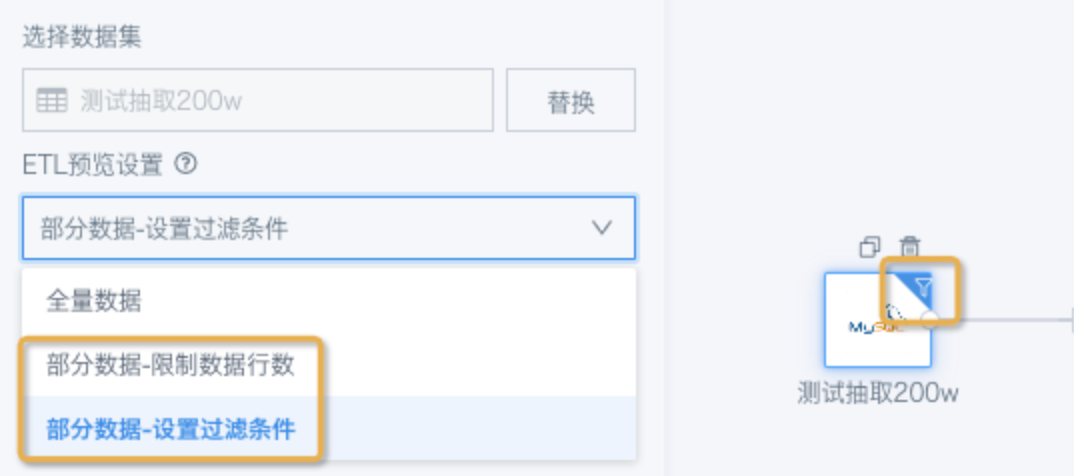

To improve preview speed and facilitate data verification, ETL supports "Preview Settings", only previewing partial data of input datasets. This setting doesn't affect result datasets, but users easily forget whether they've set it, leading to mistakenly thinking data is inaccurate.

Solution: Check if there's a funnel icon in the upper right corner of the input dataset node, and modify the setting according to needs.

4. Deduplication Calculation

Using deduplication nodes in ETL may have random effects on output results. During deduplication, corresponding deduplication primary keys are selected. For the same group of deduplication primary keys, if there are multiple rows of different data, the data after each preview or run may be different.

Solution: Choose appropriate deduplication primary keys, use "Deduplication" + "Select Columns" together; if you need to deduplicate according to certain logic, it's recommended to create new calculated fields to sort or mark multiple rows of data under the same group of primary keys, then use "Filter Data Rows" to filter data.

Example: (Click to expand)

Deduplicate according to the channel field, preview results from three nodes are all different.

Deduplication node preview result:

SQL Input (top) preview result:

SQL Input (bottom) preview result:

5. row_number Function

row_number() main function is to generate row numbers. Common usage is row_number() over(partition by [grouping field] order by [sorting field]). If using row_number to rank data, the feature of not supporting tied rankings will cause ranking to have randomness when data is the same.

Solution: Use rank/dense_rank to calculate rankings. For specific usage, please refer to Sorting Functions and Applications.

Example - Calculate node adds sorting for "Cumulative-Sales Quantity", using fields "Statistics Date", "Accounting Year", "Accounting Contract", "Product Wave" as partitions, getting sales rankings of different products on the same day.

Because "Cumulative-Sales Quantity" actually has duplicate fields, causing in the same partition, different products with the same sales quantity, their ranking order has randomness, and each time the result may be different.

6. Time Functions

Normal time functions like date, day, month won't have randomness. But if customers use now() and current_timestamp() in calculations, since these functions generate current time, results run at different time points are related to the BI server time.

Example - Calculate the difference from current date to a certain date date_diff(now(),[date])

Assuming the user's server time is consistent with China East 8 time zone, customers around midnight 12, the difference will be different. If the server is abroad but users are in China, users may encounter this problem during the day.

Solution: For servers overseas with time zone differences, it's recommended to use time functions to convert date and time for time zones. For functions, please refer to Spark Date Functions.

7. Floating Point Filtering

Because computer storage and calculation of floating point numbers are based on binary, this causes floating point numbers to inherently have certain precision errors. For floating point comparisons, computers use approximate comparisons, so when floating point errors themselves exceed the precision range, comparison results become inaccurate. For detailed introduction, please refer to Floating Point Accuracy Issues.

Solution:

-

Convert data to integer (int) for comparison. Integer comparisons don't have error situations.

-

Set a precision value you need to compare floating points: Assuming floating point precision is 0.00001, less than -0.00001 can judge this number is less than 0; similarly, judging floating point equals 0, can set condition as greater than -0.00001 and less than 0.00001; judging floating point greater than 0, can set condition as greater than 0.00001.

-

First use round(x) to get the number with the precision you want, then do filtering. Generally you can choose round(6), which is the same as BI's default display precision.

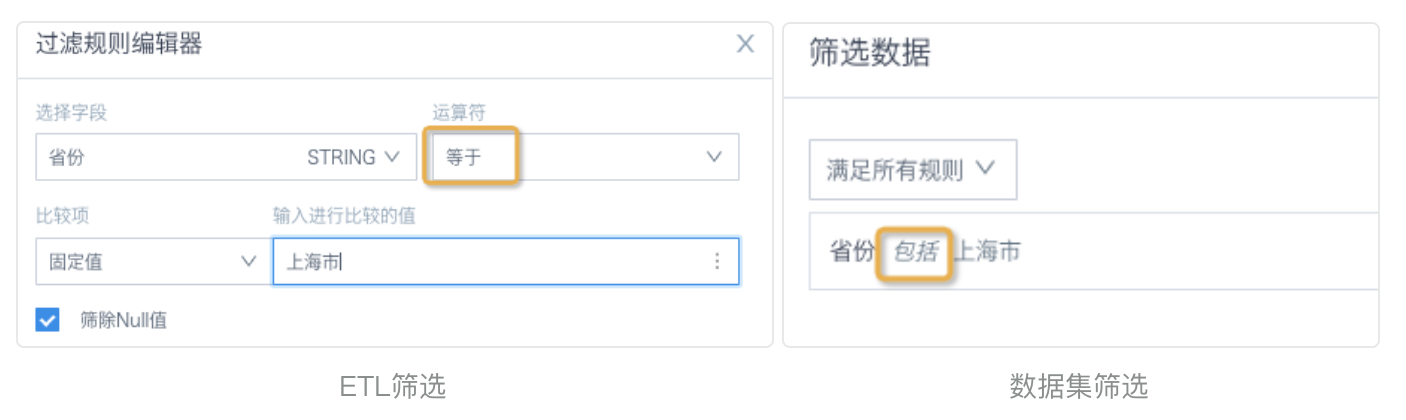

8. Filter Data Rows

Scenario 1: In ETL "Filter Data Rows" node, after selecting text type fields, the operator defaults to "equals"; in datasets, pages, cards filtering text fields, common practice is: check options from the list—after submission, the operator is "includes". If text field values have spaces before and after or other invisible symbols, the two filtering methods will have different results.

Solution: Change the filtering operator to "contains" in ETL, or use functions to remove spaces before and after text (refer to function trim()).

Scenario 2: When filtering conditions are "not equals, not contains" etc., ETL by default checks "Filter Null Values", which will automatically filter null values; when using "exclude" function in datasets and cards, null values won't be automatically filtered. This causes under the same filtering conditions, ETL data and directly filtering input dataset data to be inconsistent.

Solution: In ETL, consider whether null values need to be filtered, clarify the logic.