Floating Point Accuracy Issues

Background

Sometimes users encounter situations where floating point calculations or filtered preview results are different from expectations. Most of these are related to floating point precision issues. Based on binary storage methods, floating point numbers inherently have certain precision problems, and after multiple calculations, floating point precision issues become more apparent. Below, we'll introduce how to handle floating point precision.

Testing

1. Data Extraction

Create the following data in a CSV file:

col6,col7,col12,col6_2,col7_2,col12_2

0.999999,0.9999999,0.999999999999,0.999999,0.9999999,0.999999999999

1.000001,1.0000001,1.000000000001,1.000001,1.0000001,1.000000000001

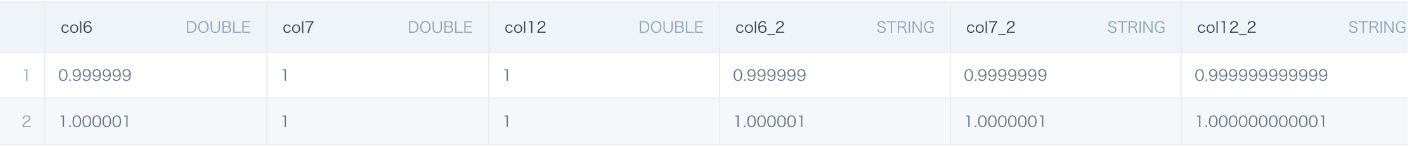

We upload the CSV to Guandata BI, select the first three columns as DOUBLE type, and the last three columns as STRING type. In BI preview, the actual displayed results are as follows:

If the floating point number in the dataset is 0.999999 (col6), the result after frontend preview will also be 0.999999. But if the dataset floating point number is 0.9999999 (col7, 7 decimal places), the preview result is 1.

Reason: In the Guandata BI system, floating point numbers have display precision. The default floating point precision is 6 decimal places, and the 7th decimal place is the error bit. The data stored at the Spark bottom layer has higher precision (refer to STRING type), and calculations and filtering are based on the bottom layer stored data.

2. Data Calculation

Based on the above dataset, create an ETL, keeping only the fields col7 and col7_2, subtract 0.5 from col7, then multiply by 0.1, then use round to get an approximation. Round will do 4-round-5-up based on the truncated next digit, retaining the specified decimal places.

We can see that both groups of data get 0.05 after direct calculation, but when doing round(1), the results are different. According to the original data calculation of col7_2, we expect the calculation result should be the column "string displayed as numeric", but actually the data stored in Spark should be the "string" data, with more than ten decimal places (double decimal part can be precise to 15 decimal places), and the last digit is the error number generated by calculation.

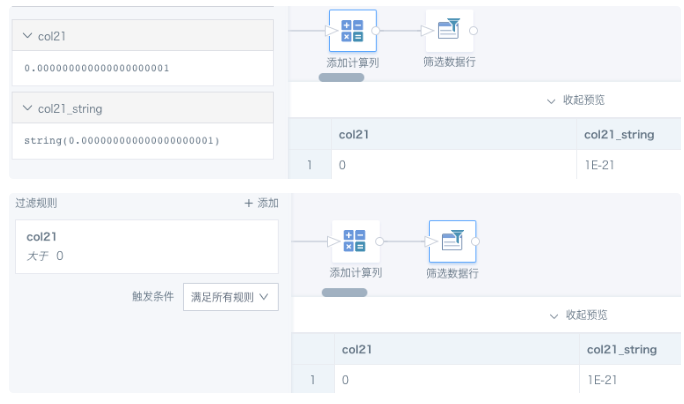

3. Data Filtering

Create a dataset containing the value 0.000000000000000000001 (21 decimal places), or create a new numeric type field in BI, both display as 0; convert this value to text STRING, it displays as scientific notation. As shown in the figure below, filtering col21 >0 can filter out this data.

From the above 2 experiments, we can conclude: For double type floating point calculations, data precision will change and produce errors, but when doing filtering comparisons, even decimals with more than 20 places can still be identified. So, the errors generated by our calculations will make floating point filtering results inaccurate.

Solution

First use round(x) to get the number with the precision we want, then do filtering. For precision selection, generally you can choose round(6), which is the same as BI's default display precision. If you know your data's original precision, you can also use the data's original precision, or convert the field to a higher precision decimal type. For example, cast([numeric] as decimal(15,6)), where 15 represents a maximum of 15 digits, with 6 decimal places.

For the first case above, if you don't use the round function to approximate the numeric value, wanting to filter out the 0.05 calculated from col7, you won't get results. Comparison of two filtering results is shown in the figure below.

FAQ

1. Why do integers converted to text with functions have decimal points?

Reason: In BI, when creating new calculated fields, selecting "numeric" type or performing mathematical calculations on field values, the output type defaults to double. The system rounds the data and retains 6 decimal places, then automatically removes trailing zeros. For example, if the data after rounding is 1.0, it displays as 1; if the data is 0.100000, it displays as 0.1.

Solution: If you need to always maintain as integers, you need to use functions to convert to integers, such as int()/cast( as int).

2. Why are rounding results incorrect?

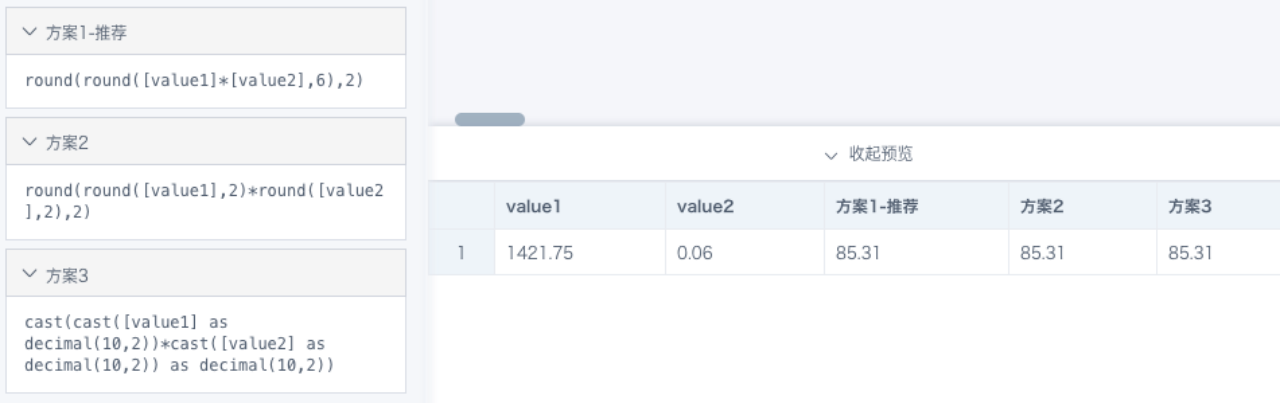

Case: For example, 1421.75*0.06=85.305, rounding to 2 decimal places should be 85.31, but after round(2) it's 85.3.

Reason: The values 1421.75 and 0.06 seen in BI are already rounded results, not representing the original accurate data. 85.305 is the result of rounding the calculation result, retaining 6 decimal places, equivalent to round(6), which has different precision from round(2), so the results cannot be guaranteed to be equal.

Solution: round(2) equaling 85.3 is also a reasonable calculation result; if you need to continue rounding based on 85.305, it's recommended to use round(round([numeric1]*[numeric2],6),2). There may be multiple implementation schemes, it's recommended to use flexibly according to actual situations (refer to the figure below).

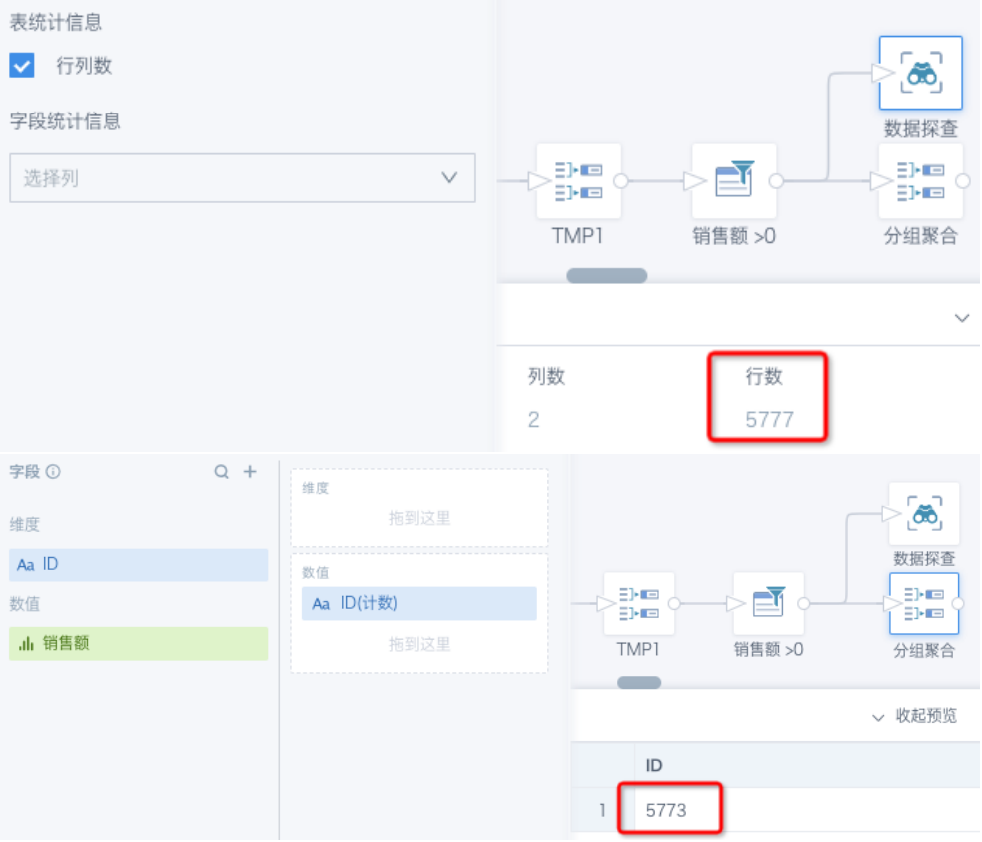

3. After filtering numeric>0, why do counting results change and become inaccurate?

Reason: Each new query requires recalculating and filtering the previous data. Each time, the data precision may have changes invisible to the naked eye, so filtering results may produce errors.

Solution: Specify numeric precision before comparison. For example, change to filtering [sales_amount]>0.000001, or round([sales_amount],6)>0, round([sales_amount],6)>0.000001.