count_if Function Usage Instructions

Scenario

In Excel, the COUNTIF function is a commonly used statistical function for counting the number of cells that meet a certain condition. The function syntax is =COUNTIF(lookup_range, lookup_criteria). In Spark SQL used by Guandata BI, there is also a similar count_if function that returns the number of field values that meet a certain condition. However, when users directly use this function, the system pops up the following error. Is it that Guandata doesn't support this function, or is it a usage error?

It is not allowed to use an aggregate function in the argument of another aggregate function. Please use the inner aggregate function in a sub-query.

Correct Usage

Guandata non-direct connection, non-accelerated dataset overview pages, cards, and ETL (all scenarios using Spark SQL) all support the count_if function. However, it needs to be used with window functions. For window functions, please refer to [Window Function Usage Introduction](1-Spark Functions/4-Spark Window Functions and Applications.md).

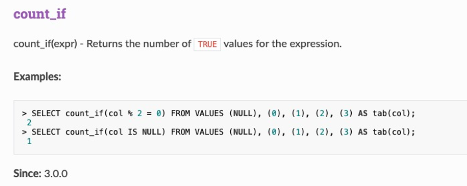

Official usage instructions: https://spark.apache.org/docs/latest/api/sql/#count_if

Example

Calculate the number of products with retail price over 100 yuan by product category. Drag the field to the value bar, select "Maximum" for aggregation method, and select "Calculate by aggregated data—Sum" for subtotal and total calculation method. The formula and calculation effect are shown in the figure below.

Note: The count_if function counts data without deduplication. If deduplication counting is needed, it's recommended to use the following alternative solutions.

Alternative Solutions

- Create a calculated field using case when/if to filter data that meets the conditions; drag the field into the value bar, select "Count" for aggregation method (select "Distinct Count" if deduplication counting is needed), and select "Calculate by original data" or "Calculate by aggregated data—Sum" for subtotal and total calculation method.

- If you need to use the deduplication counting result data for secondary calculation or filtering, you need to create a calculated field using the window function size(collect_set()over(partition by )) to calculate. If you need to display it in the report, drag it into the value bar, select "Maximum/No Processing" for calculation method, but note that with this method, subtotals and totals cannot be calculated normally; and in cases with large data volumes, window functions are resource-intensive and load slowly, so use with caution.