Using Time Series UDF to Build Historical Zipper Table

Are you still at a loss for how to store tens of billions of rows of historical snapshot data? Are you still frustrated by the snail-like query speed of historical snapshot data? Don't worry, Guandata brings you BI black technology—the historical zipper table solution, which will help you completely solve the above problems.

The term "historical zipper table" is not invented by Guandata. In fact, after years of practice and refinement by data warehouse builders, historical zipper tables have long been used in scenarios of massive historical data compression storage and query. However, building and maintaining zipper tables on relational databases generally requires multiple complex steps such as zipper initialization, opening, closing, and incremental updates. Each step requires users to be very clear about the operation logic of the zipper table and be very familiar with SQL. Therefore, in general, unless you are an experienced data warehouse engineer, it is difficult to master this method, let alone design zipper tables within a BI analysis platform.

However, the Guandata analysis platform, based on the Spark computing engine, completely simplifies the process of building and maintaining historical zipper tables, and summarizes and encapsulates a set of custom functions (time series UDFs) for building and querying historical zipper tables, allowing users to build historical zipper tables with just a few simple functions and support efficient queries.

Introduction to Historical Zipper Table

In the process of data warehouse construction, we often encounter a type of large data problem with the following characteristics:

- The overall data volume is large, such as inventory information at the store+SKU level.

- Some fields in the table are constantly updated over time, but the data updated each day is only a small part of all the data. For example, in the above example, the store+SKU combinations with sales may only account for 10% or even less of all combinations.

- Users need to view historical snapshot information at any point in time.

The most direct solution to the above problems is to store the full snapshot data of each day, provide a date primary key, and then open it for users to query. However, this actually saves a lot of unchanged information, which is a huge waste of storage. Moreover, improper design can seriously affect query efficiency and drag down the database.

For example, a chain pharmacy company with 3,000 stores and 1,000 SKUs would have 3 million records per day if storing inventory snapshots, 1 billion per year. If 5 years of historical data are required, nearly 5 billion historical snapshot records need to be stored.

To solve this problem, the historical zipper table was born. The historical zipper table is a table that maintains historical status and the latest status data. The data stored in the zipper table is actually equivalent to snapshots, but optimized to remove some unchanged records. Through the zipper table, it is easy to restore the customer record at the zipper point in time. The zipper table can reflect the historical status of the data and maximize storage savings and improve query efficiency. Next, let's see how the Guandata analysis platform helps you quickly build historical zipper tables and provide snapshot queries.

1. Building Inventory Zipper Table

Prerequisites

-

We need a historical inventory snapshot table, which generally contains date, product basic information, and inventory data (such as inventory amount). If you need to create a snapshot table in BI, please refer to [How to Create Data Snapshots with ETL](../1-ETL/4-How to Create Data Snapshots with ETL.md). If you have inventory transaction details (inventory flow), you can also skip the data snapshot step and create the inventory zipper table from the daily summary table of inventory flow.

-

You need to use Guandata's custom zipper table functions. The latest time series UDF function list is as follows.

-

Function usage instructions

- The dataset needs to have a date format field as the primary key. If using datetime (timestamp), the hour, minute, and second information will not be retained.

- Functions with "text" in the name are used to process date primary keys and text fields, other functions are used to process date primary keys and numeric fields.

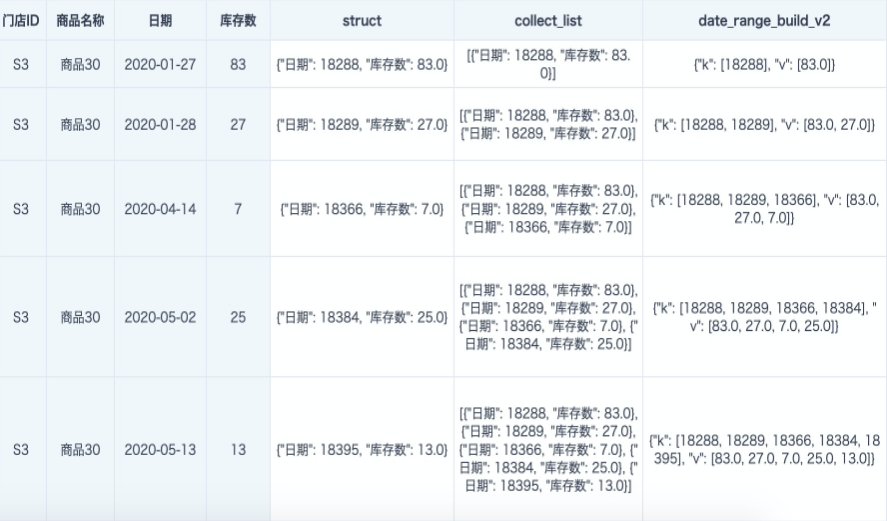

- Among the above functions, date_range_build_v2 and date_text_range_build_v2 first process the original date primary key and the result field to be queried (numeric or text field) into a struct_array array, which needs to be aggregated using functions like collect_list(struct(date, value)).

- The struct function is used to merge two columns of data into a key-value pair, and also converts the date to the computer's default Unix date (starting from 1970-01-01 as 0, then incremented by days).

- The collect_list function is used to merge multiple rows of data into one row by grouping, resulting in an array format. It needs to be used with group by (or window function over(partition by )).

- The date_range_build_v2 and date_text_range_build_v2 functions recombine the obtained struct_array to get a key-value pair, where k is an ascending array of dates and v is the corresponding inventory number array.

-

Step-by-step processing results and formats can be referred to as follows:

-

The dateRangStruct/dateTextRangStruct obtained by date_range_build_v2 and date_text_range_build_v2 can continue to be compressed, merged, accumulated by period, or queried using other time series UDF functions.

-

The old functions date_range_build(date_array, value_array), date_text_range_build(date_array, text_array) can still be used, but only in scenarios where there are no null values in the date and value fields (if there are nulls, they need to be replaced in advance). Because collect_list will discard null values, if there are nulls in the date or value, it will cause misalignment and loss of some data (if there is a null in the value, its corresponding date will correspond to the next date's value, and the last date will be discarded). Therefore, now use date_range_build_v2(dateRangStruct) and date_text_range_build_v2 instead of the old functions. Similarly, the original date_range_remove_adjacent_same_values(dateRangStruct) and date_text_range_remove_adjacent_same_values(dateTextRangeStruct) are now replaced by date_range_zipper(dateRangStruct) and date_text_range_zipper(dateTextRangStruct). We generally recommend using the new functions.

Implementation Steps: Taking Inventory Snapshot Table as an Example

- Import the prepared snapshot table into the Guandata platform, create a Smart ETL, and first aggregate the data to the required granularity.

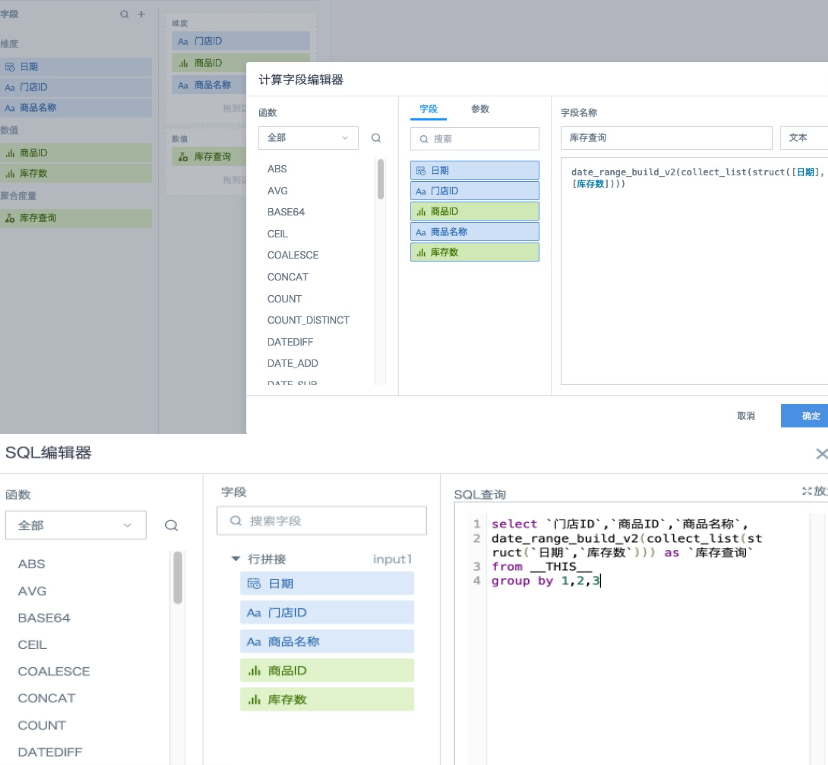

- Add a group aggregation node and add an aggregation field "Inventory Query" (type: text), then drag the required dimension fields into the dimension area, drag the aggregation field "Inventory Query" into the value area, and perform group aggregation calculation. The expression for this field is:

--Method 1: (Recommended)

date_range_build_v2(collect_list(struct([Date],[Inventory])))

--Method 2: If you prefer to use SQL to process data, you can also use the "SQL Input" node to handle this process. SQL expression:

select `Store ID`,`Product ID`,`Product Name`,

date_range_build_v2(collect_list(struct(`Date`,`Inventory`))) as `Inventory Query`

from __THIS__

group by 1,2,3

--Method 3: You can also try creating multiple new fields (all type: text)

Use functions struct(), collect_list()over(partition by ), date_range_build_v2() step by step to get the calculation result, then use a group aggregation node to aggregate the data

At this step, we can get an inventory zipper field, which has actually greatly compressed the snapshot data.

- Furthermore, for inventory data, some products may not change inventory for a long time, that is, there may be a large number of consecutive duplicate data in the inventory number array above. So we add a calculated column using the date_range_zipper function to further compress the inventory snapshot key-value pairs and build the inventory zipper field. The compression method: add a record with null value for missing dates and compress. If there are missing dates and the previous date value is not null, add a record with the adjacent date to the previous record and null value, and compare whether the current date is compressed with the newly added record; at the same time, remove duplicate adjacent equal value records, keeping only the first one. In short, it has two effects: remove duplicate data and fill missing time periods with null.

Based on these two effects, when using snapshot tables to create zipper tables, it is recommended to use the date_range_zipper function for deduplication; when using flow tables to create zipper tables, you do not need and should not use the date_range_zipper function for deduplication.

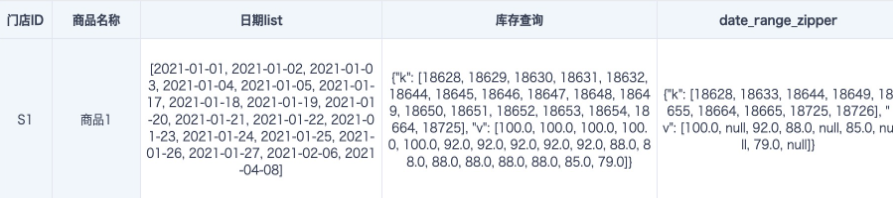

Using date_range_zipper([Inventory Query]) after data compression, the effect is as follows:

Legend explanation:

From 2021-01-01 to 2021-01-05, the inventory number is 100. After compressing 5 records, only one record for 2021-01-01 (18628, 100) remains. From 2021-01-06 to 2021-01-16, data is missing, dates are automatically filled, inventory is filled with null, and then compressed to one record for 2021-01-06 (18633, null), and so on until completion.

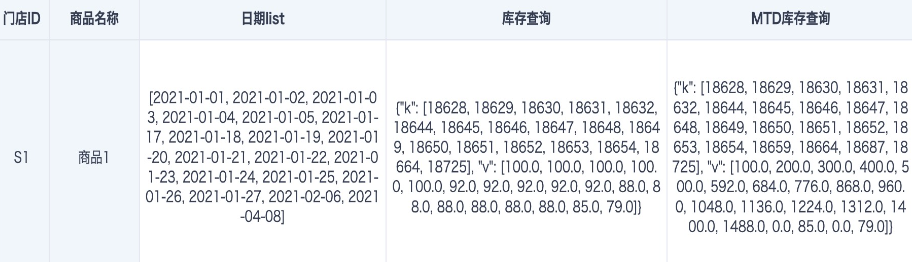

- If you need to do monthly cumulative (MTD) statistics for inventory, you can add a calculated column "MTD Inventory Query".

--In addition to monthly cumulative, also supports yearly cumulative ('year') and weekly cumulative ('week')

[MTD Inventory Query]:date_range_period_to_date([Inventory Query],'month')

Legend explanation:

There is data in January, February, and April 2021, and the inventory is accumulated by month. For example, the final cumulative value for January is 1488. From February, the first day of each month is automatically filled as the initial date, 2021-02-01 corresponds to 18659, inventory is filled with 0, 2021-03-01 corresponds to 18687, inventory is 0; April also automatically fills the initial date and inventory, but because there is no other valid data in March, and the data for 2021-03-01 (18687, 0) is adjacent and equal, the data for 2021-04-01 is deduplicated.

Note:

date_range_period_to_date function can only perform cumulative calculation on inventory values after date_range_build_v2, cannot process text data in date_text_range_build_v2, nor can it calculate on data compressed by date_range_zipper (there are null values for filling, null values cannot participate in calculation; and the data is deduplicated and not suitable for accumulation).

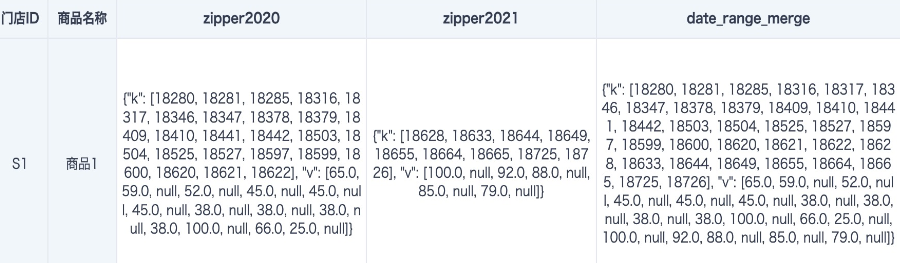

- If you have two inventory zipper tables for different years and want to merge them together to support cross-year queries, you need to first join the two inventory zipper tables by primary key, and then use the date_range_merge() function to merge the two inventory zipper fields without compression.

date_range_merge([zipper2020], [zipper2021])

- Add an output dataset to ETL, save and run, and get a historical inventory zipper table with drastically reduced data volume.

2. Inventory Zipper Table Query

After the historical inventory zipper table is built, how to query the inventory number for any date on the dashboard? Because there is no independent date field in the table, you need to use the function date_range_lookup or date_range_get together with global parameters for data query.

Implementation Steps

- Create or directly use an existing global parameter of date type in the system.

- Create a card, create a calculated field, directly click to reference the date parameter "Query Date" from the parameter list on the left, set the field type to "Date", and then drag it into the dimension bar.

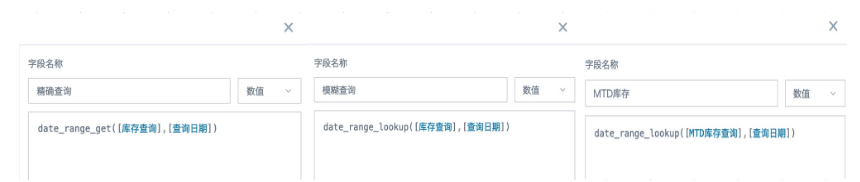

- Create calculated fields "Exact Query", "Fuzzy Query", and "MTD Query" as needed, set the field type to "Number", and then drag them into the value bar, select "No Processing" for aggregation method.

- Save the card and return to the page. Create a date type filter, check the global parameter "Query Date" used by the card when linking the card, and save. Or you can also create a parameter filter, reference the global parameter "Query Date" and save, the parameter filter will automatically link all cards on the current page that use the same global parameter. Other dimension field filters use ordinary selection filters for linking. The query effect is as follows.

Legend explanation:

On 2021-01-27, there is data, both exact and fuzzy queries can find the corresponding inventory number 88, and MTD inventory 1488; on 2021-01-28, there is no data, exact query has no result, rolling up finds the inventory number 88 and MTD inventory 1488 for 2021-01-27.