Create Data Snapshot with ETL

Background

Some fields in a dataset may change from time to time, such as product price, membership level, or event progress. If the source database does not store all historical information, or the data displayed on the dashboard is based on current-time aggregated data, how can we query past data status or record all data changes?

This is where data snapshots come in. A snapshot is a fully usable copy of a specified data set at a certain point in time (the start time of the copy), including the image of the corresponding data at that time. A snapshot can be a replica or a copy of the data it represents. Like a camera, a snapshot records that moment. The difference is that a snapshot can be restored to that moment, but we can never return to the moment in a photo.

Applicable Scenarios

If the data volume is small or only changed data needs to be recorded, it is recommended to use Guandata BI's built-in ETL to create data snapshots. For large data volumes, it is recommended to use a zipper table to store historical data (which requires UDF time series functions or view datasets, not covered here).

Implementation Method

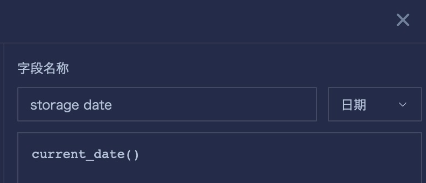

- In ETL, process and aggregate the original data as needed (group aggregation is recommended) to reduce data volume, then create a new calculated field "storage date" to timestamp the data. For daily snapshots, use current_date().

- Set the output dataset name and save path, then run the ETL.

- Return to the ETL editing page, temporarily disconnect the output dataset from the previous step (just delete the connection line, do not delete the output dataset).

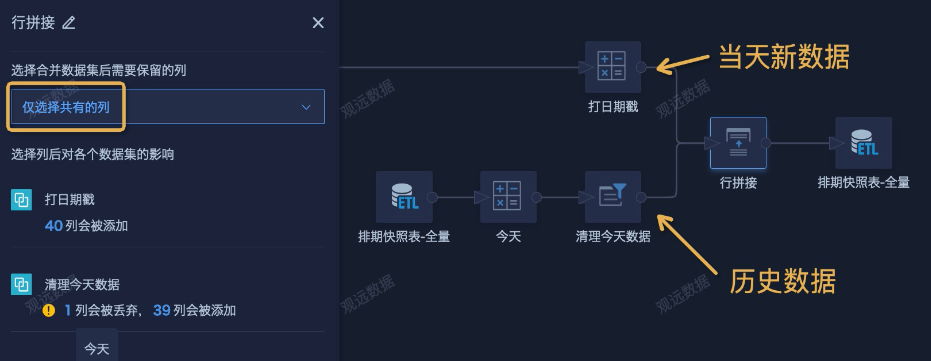

- Add a new "Input Dataset" node, select the output dataset from the previous ETL run. To prevent redundant data from multiple runs in one day, add a calculated column "Today" (function current_date()), then filter where "storage date" is less than today to clean up today's data first, i.e., pre-cleaning to get historical data.

- Use the "Row Concatenation" node to concatenate the latest data of the day and historical data, then connect to the original output dataset and save. Set up daily scheduled updates; generally, updating once a day is sufficient.

Case Sharing

A task scheduling snapshot table has been created as described above. Now, we need to filter out records where the task schedule changes each day and generate snapshots, so that the dashboard can display schedule changes within any time range and all tasks that have changed within a scheduling period.

Implementation Steps

-

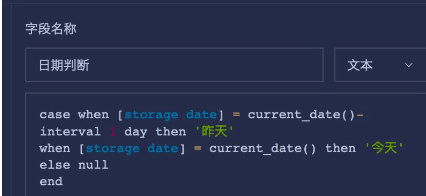

Create a new ETL, select the input dataset, and add a calculated column to determine today and yesterday.

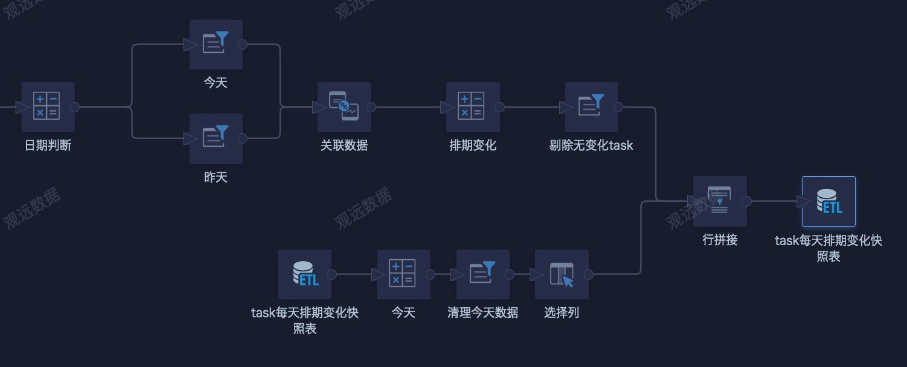

- Add two "Filter Data Rows" nodes to filter "today" and "yesterday" respectively, then left join "today" as the base table with "yesterday". Select only the required fields and rename as needed.

- Create a new field to compare yesterday's and today's data, determine if the schedule has changed, then filter out unchanged records, set the output dataset, save, and run.

- Reuse the output dataset from the previous step as the input dataset, concatenate with the original data table, save, and run the ETL again. Set up daily scheduled updates. After the first run, only the data for the current day will be available. As it runs daily, all schedule changes will be recorded and saved, completing the snapshot table.

- Create dashboard cards, use the date filter to link to the new date stamp field (storage date) in ETL, and use global parameters for cross-field search of schedule information by creating new fields.

- The final page display is as follows:

Snapshot Table Maintenance

Try to check data quality and determine the final fields to keep after the first ETL run and before adding row concatenation. If you modify the logic or add/delete fields after running for a while, you need to modify both branches before the "row concatenation" in ETL.