Pre-cleanup Rules Usage Instructions

Scenario

Considering that fact tables in enterprise data warehouses may change after being extracted to the BI platform, such as corrected data or removal of dirty data, etc., it is often necessary to first clean the fact tables extracted by BI according to certain rules, such as deleting data from the last 7 days, then re-extracting data from the last 7 days, to ensure that the data extracted by BI maintains consistency with the data in the internal enterprise data warehouse. Such fact tables usually have large data volumes, at the million level. If full re-extraction is performed, it will be time-consuming and resource-intensive. Therefore, we have added a pre-cleanup rules editor for Guan-index type dataset data updates, making it convenient for data managers to clean data according to specified rules before extraction.

Or, after incremental updates for a long time, the data volume of extraction datasets becomes larger and larger, causing subsequent ETL run times to become longer and longer, but actually only historical data within a fixed time range is needed. Previously, historical data could only be manually cleaned through the "Data Cleanup" function, but now it can also be achieved by setting pre-cleanup rules to implement dataset slimming, such as automatically clearing historical data from 1 year ago, so that the current dataset always only saves data from the last 1 year.

Path

Extraction dataset "Data Update" tab, after checking "Incremental Update", "Pre-cleanup Rules" automatically appears below.

Usage Method

- First set up the deduplication primary key and incremental update SQL statement and preview to ensure the data is correct.

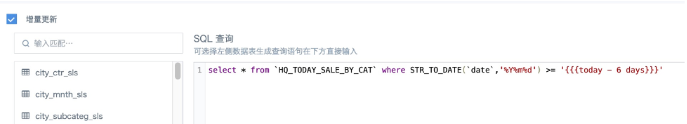

For example, you need to incrementally update data from the last 7 days on schedule daily. If the date field itself is in date/datetime format, you can directly compare it with the time macro in the where condition, refer to the figure below:

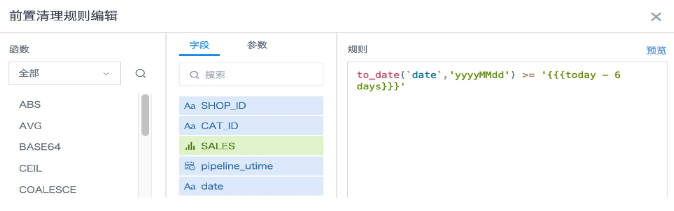

- Check "Pre-cleanup Rules", write the same time filtering conditions as in incremental updates in the popup settings box, preview to ensure the data is correct, click "OK", then click "Apply" in the bottom right corner of the page to save the settings.

Notes

In the above scenario, the date field is in date/datetime format, and date-time judgment uses time macros without using any functions, so the pre-cleanup rules can directly copy and paste the condition statement after where.

However, when function formulas need to be used, the condition statement cannot be copied as is. Because "Incremental Update" queries data directly from the user database and must use SQL functions corresponding to the database; "Pre-cleanup Rules" delete data already stored on the BI server and must use Spark SQL functions. "Data Cleanup", "Row and Column Permissions", "New Calculated Fields", etc. are the same, all processing data already stored on the BI server, requiring Spark SQL functions.

For example, the field "date" in the MySQL extraction dataset is a text type field (String) in the format 20220228. In incremental updates, you can use DATE_FORMAT(date,'%Y-%m-%d') or STR_TO_DATE(date,'%Y%m%d') to convert the date format and compare with the time macro.

But in "Pre-cleanup Rules", the Spark SQL syntax is like this: to_date(date,'yyyyMMdd') >= '{{{today - 6 days}}}'. Different database functions are different, even if the same function is universal across multiple databases, the specific usage will also have differences. For example, the letters y/m/d representing year/month/day have different meanings in different databases, and cannot be mixed randomly.